Operations

Core Area 1: In this section, I discuss my approach to managing operations within a learning technology environment.

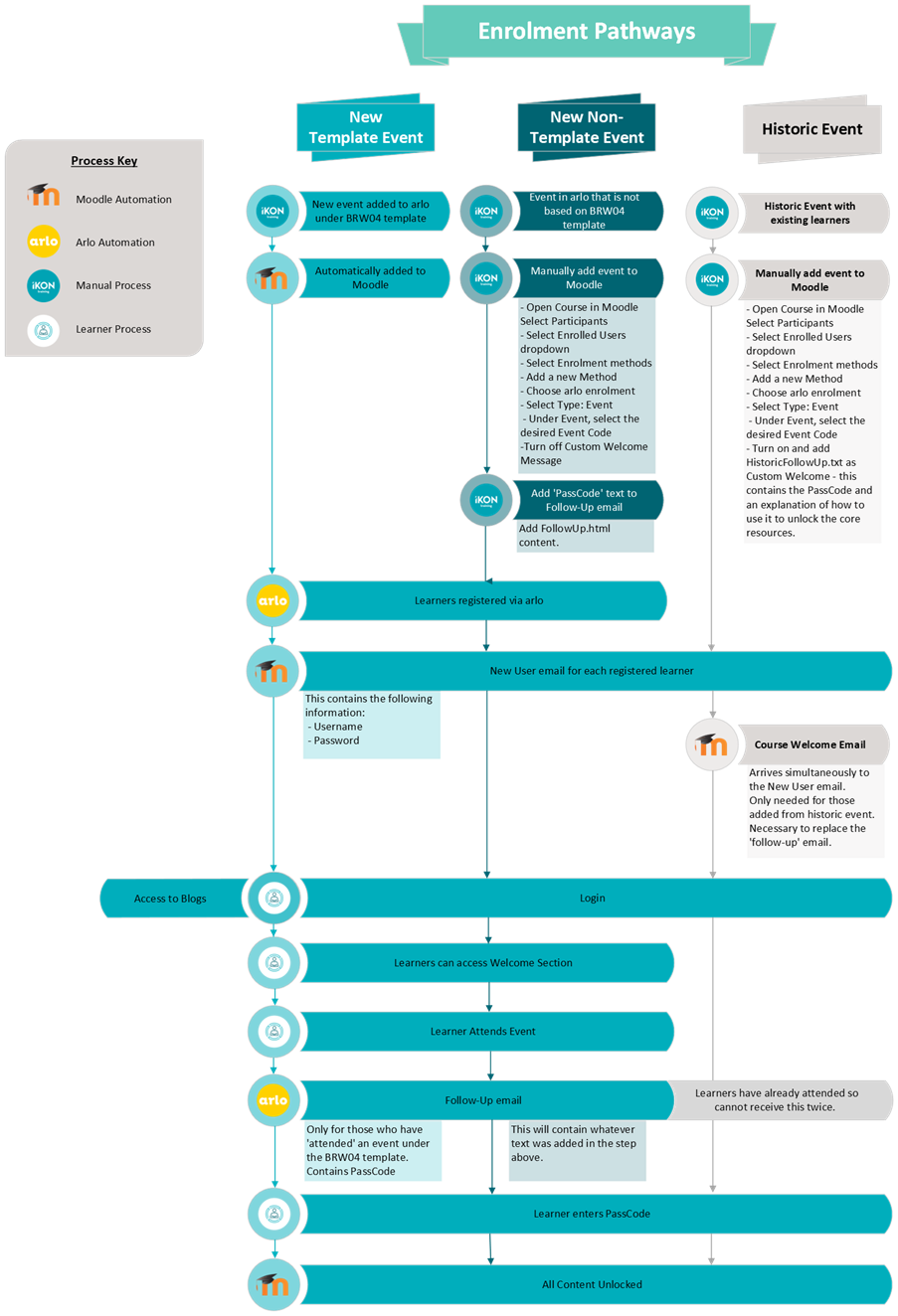

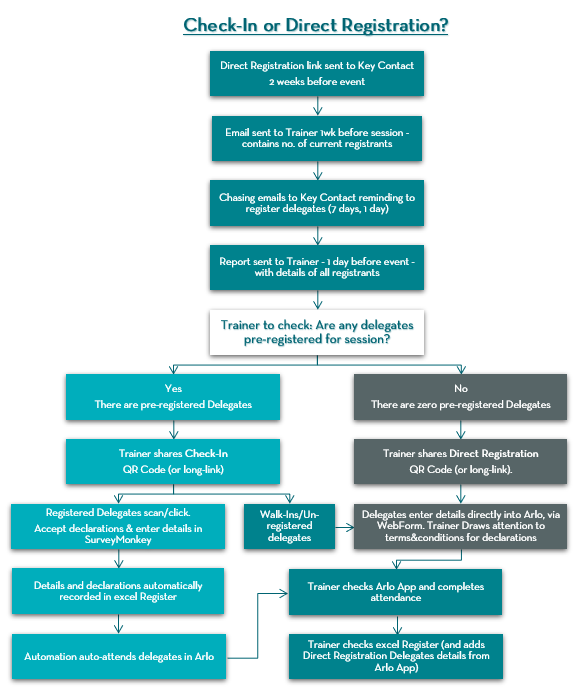

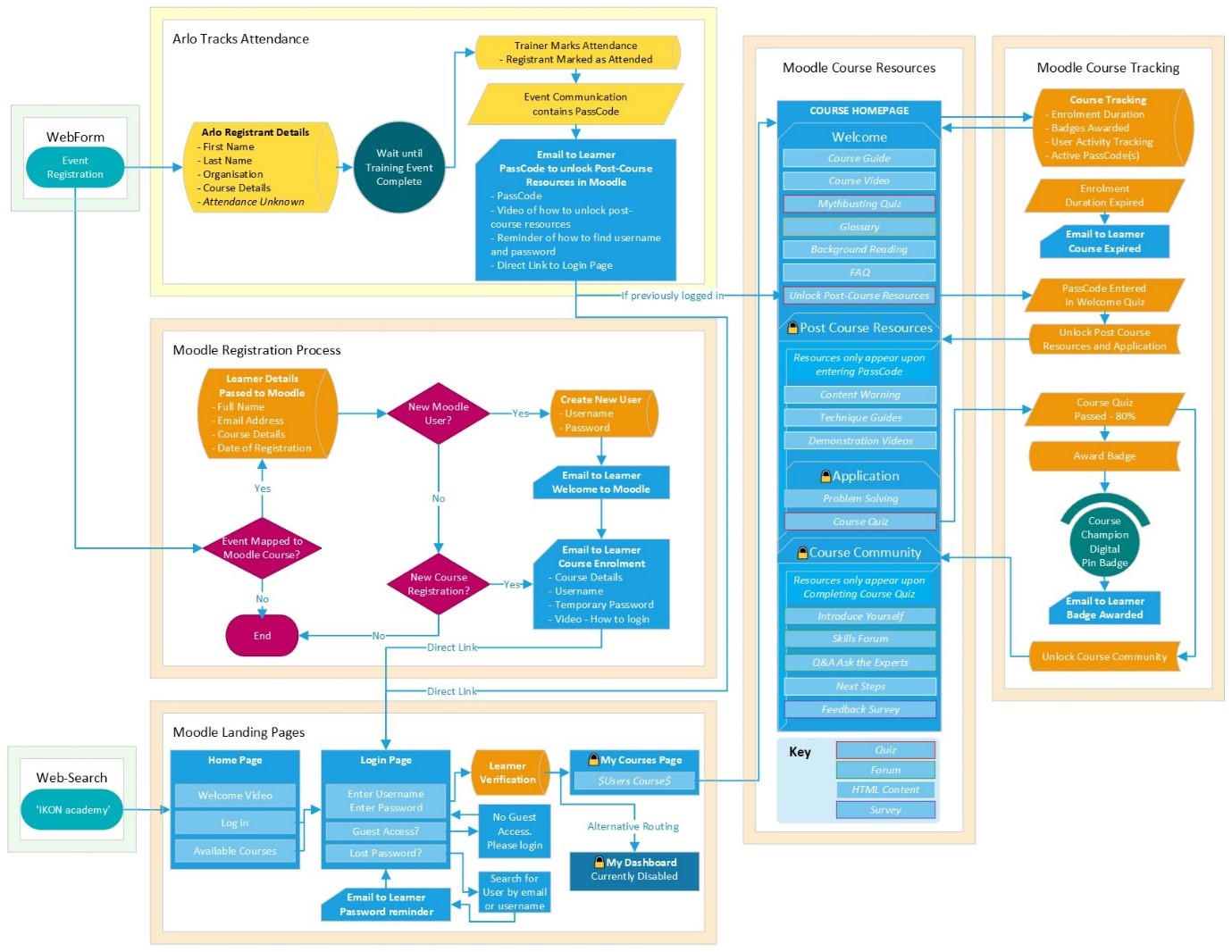

Evidence of Mapping the Processes around Enrolment

Process map for the development of the online platform. Created in Visio. Designed to enable the explanation of the process.

Core Area 1a An Understanding of the Constraints and Benefits of Different Technologies

Digital Development Strategy

In my first year at IKON Training, I was given the responsibility of implementing a fully integrated Training Management Software (TMS). To effectively manage this complex process, I developed the Digital Development Strategy, using the ADDIE approach as the structural backbone. This structured approach—Analyse, Design, Develop, Implement, and Evaluate—ensured that each phase of the project was meticulously planned and executed, aligning with both organisational needs and technical requirements. I found additional benefits in this approach as it helped to delineate each stage of development for the rest of the team, and gave a sense of measured and sustained progress. It also gave me a clear, well-structured methodology for approaching the early stages of the DDS.

As the company was intent on a short timeframe (under six months), I chose to implement a range of commercial software. As requirements evolved, this grew into the integration of multiple software solutions, including Arlo, SurveyMonkey, Power Automate, SharePoint, and more. It was challenging to align a functional TMS with IKON Training's business practices, but this was achieved within six months. The full suite of software was tested and launched in April 2023.

ADDIE Methodology

Analyse Phase:

The Analyse phase involved an in-depth fact-finding mission to identify potential barriers, user requirements, and the technical skills necessary for success. My focus was not only on understanding the gaps within our operational processes but also on assessing my own skills and the resources available to ensure that the project could be delivered successfully. This phase also required me to conduct extensive research into legal requirements surrounding data storage, particularly GDPR compliance. At the time, IKON lacked a coherent data privacy policy, which prompted me to draft, amend, and publish a comprehensive policy to ensure that our practices aligned with regulatory standards.

Another key aspect of this phase was engaging with stakeholders—including trainers, administrative staff, and external IT personnel—to understand their specific needs. Through interviews, surveys, and workshops, I gathered insights into existing obstacles and desired outcomes. A lot of time was spent in scoping meetings with the directors, particularly the Operations Director. Before I started at IKON, they had been involved with an external development company that had produced lots of documentation on their own efforts to create a bespoke TMS. This information was critical as I was able to steer away from the mistakes and dead-ends they had tried. It also led me away from my early attempts to develop a solution in-house: if a team of developers had not been able to deliver, it lowered my confidence that I could do this entirely independently. Meeting with the Operations Director and reading the pre-existing documentation shaped the direction of the Digital Development Strategy, ensuring that the chosen solution would be well-received and effective in meeting the organisation's goals.

Design Phase:

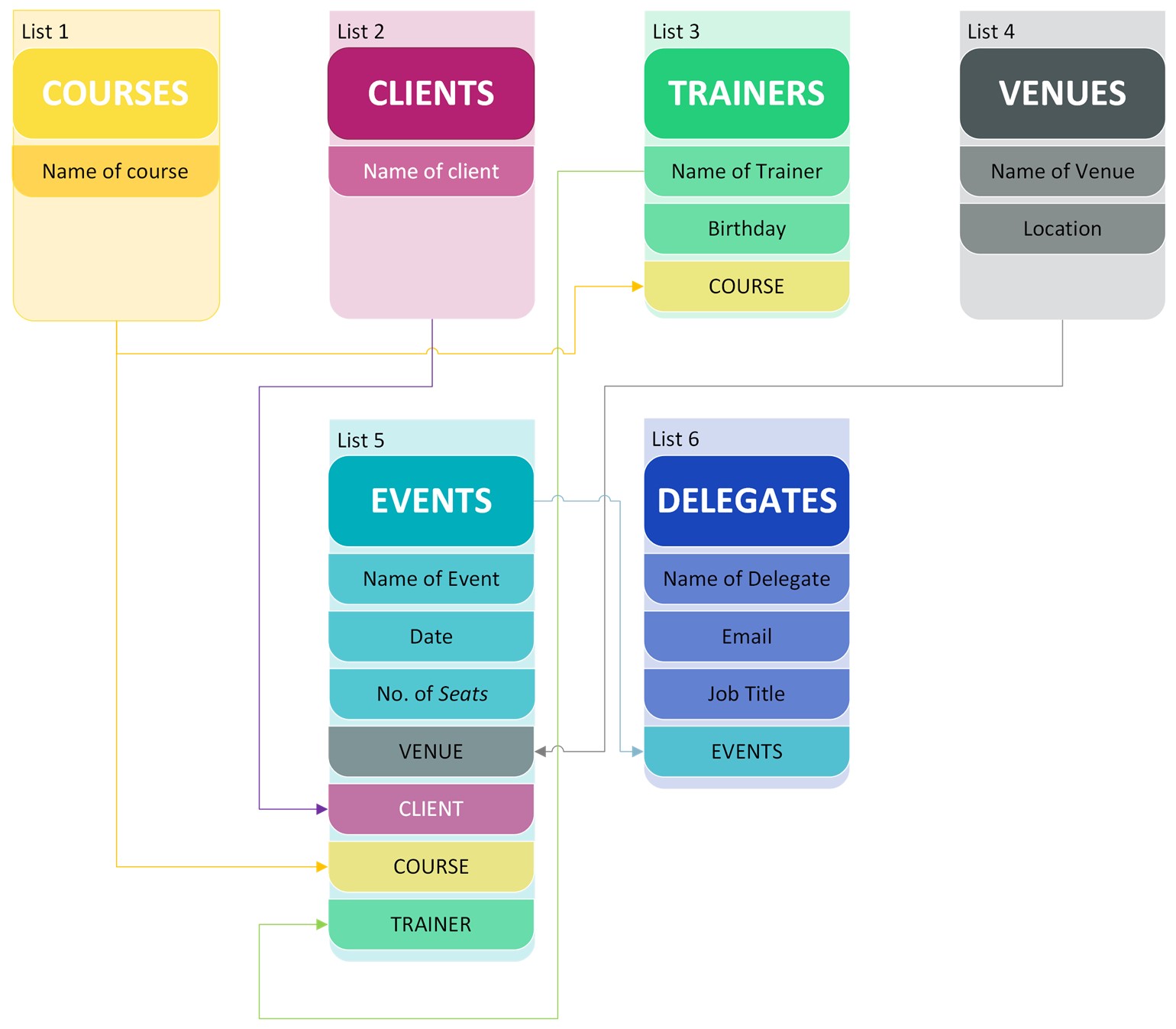

In the Design phase, I designed a data system to handle information about clients, delegates, and training events, with a strong emphasis on GDPR compliance and data security. This involved designing workflows for data entry methods that would work with IKON's business model, data processing, and retrieval that adhered to legal standards while maintaining user-friendliness. I evaluated whether an internally developed storage system or an external solution would be more appropriate.

An important element I considered was the scalability of the solutions on offer. I looked at the number of events currently offered by IKON and considered the potential growth, based on the available number of other clients in that sector. I then scaled up the number of learners and the growth in the amount of data storage that would be required. With these calculations in mind, we ruled out software solutions that had a higher per-learner cost, with some solutions costing over £2 per learner. Although we were not ready to develop one at that time, we also ruled out software that did not integrate with an LMS, as that was considered part of the future growth of the company. The data system had to be scalable in terms of cost so that the value of the training far outweighed the costs of the system, with the potential to gain further advantage from later integrations, such as Moodle.
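The scaling reasoning above can be sketched as a small calculation. This is an illustration only: the learner numbers and growth rate are hypothetical placeholders rather than IKON's actual figures, and the function name is invented for this example. Only the £2-per-learner comparison point comes from the text.

```python
def projected_annual_cost(learners_now, annual_growth, years, cost_per_learner):
    """Project the yearly platform cost as learner numbers grow.

    All inputs are hypothetical; annual_growth is a fraction (0.5 = 50%).
    """
    costs = []
    learners = learners_now
    for _ in range(years):
        costs.append(round(learners * cost_per_learner, 2))
        learners = learners * (1 + annual_growth)
    return costs


# Hypothetical comparison: 1,000 learners growing by 50% a year,
# priced at £2.00 per learner over three years.
print(projected_annual_cost(1000, 0.5, 3, 2.0))
```

Running the same projection for each candidate product makes it easier to see how quickly a per-learner price compounds as the client base grows.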

In addition to the data system, I designed the integration pathways between various software platforms. This included mapping out how Arlo would communicate with other tools like SurveyMonkey, SharePoint and a future integration with Moodle, as well as planning, designing, developing, testing, and deploying the automation workflows that would streamline data transfer and reduce manual input. The design phase was also an opportunity to create user interfaces and support materials that would make the system accessible to all stakeholders, regardless of their technical proficiency.

After thorough consultations and analysis, the Directors chose to implement Arlo, an external platform, due to its enhanced resilience, security features, and scalability. Leveraging an external partner allowed us to benefit from industry-standard security measures and ensured that the system could grow alongside the organisation's evolving needs.

I found my skills at creating visualisations of data-flows to be of particular use in the design phase. The directors wanted a way of visually comprehending, not only how the system would look to users, but also of how the data would flow from one area of the business to another.

Development Phase:

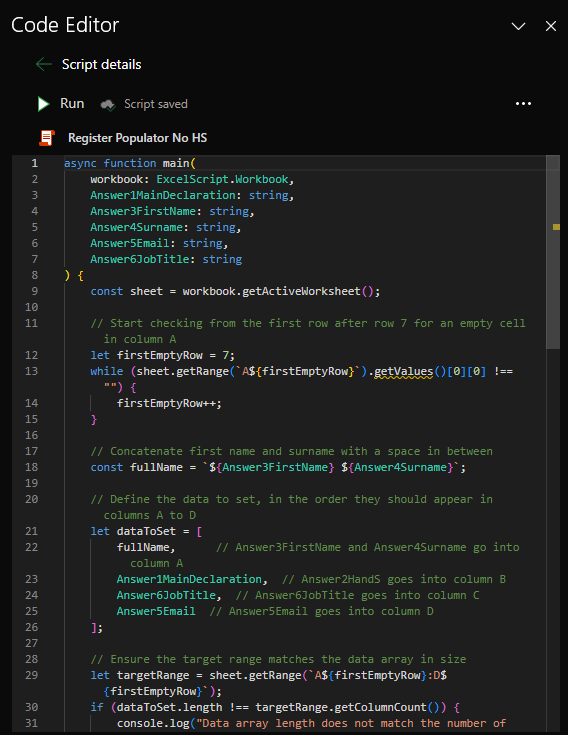

During the Development phase, I focused on automating data capture and distribution in a test environment, integrating multiple software solutions including Arlo, SurveyMonkey, Zapier, Power Automate, and SharePoint. This phase was particularly challenging as it required aligning these commercial off-the-shelf products with IKON's specific business practices. For instance, I used automation software to create custom automations that generated custom emails comprising key event data and registration links from the Arlo database, enabling seamless data transfers and ensuring that learners were automatically granted access to relevant resources.

One of the most complex aspects of this phase was ensuring interoperability between the different platforms. Each system had its own unique set of APIs and data structures, which required careful planning and customisation. I developed several custom scripts and workflows to facilitate communication between these systems, ensuring that data integrity was maintained throughout the process. Additionally, I created training and support resources to help staff adapt to the new system, including step-by-step guides, video tutorials, and an internal knowledge base hosted on SharePoint.

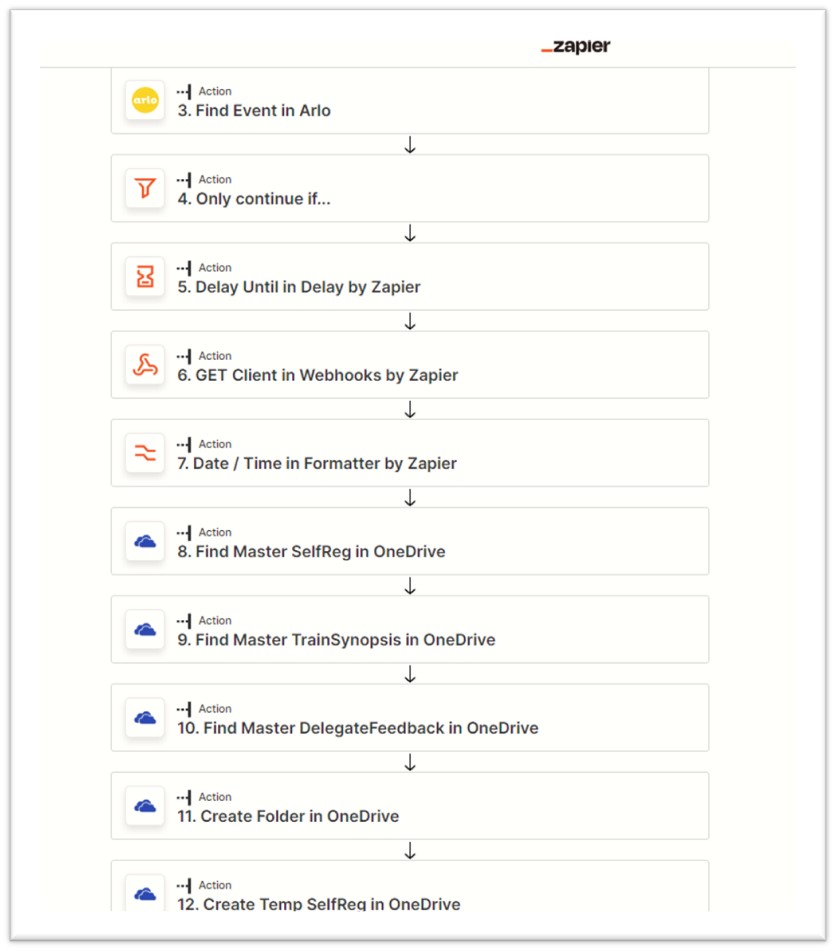

The most difficult stage was the creation, population, approval, and distribution of the Admin Documents. These are a series of three reports that go to clients with the list of registrants, their feedback on the event, and the trainer's feedback on the event. Before the Digital Development Strategy, the creation of these reports was entirely manual. I first created two automations in Zapier, each with 24 steps. As Zapier could not adequately handle branching automations, I needed two separate versions of this automation, because some events required sending a different survey to the learners, reflecting the more physical nature of some of IKON's training. This proved both ineffective and expensive.

I realised that I would have to move the automations into a single process in Power Automate. This solved the issue with branching: easily handled by Power Automate. The other issue was that Zapier could only create documents in OneDrive, not in SharePoint, meaning that all the admin was being created in my personal OneDrive, which was not a resilient solution. The whole process would fail if I were to leave the company, so I had to transition to the creation of the admin documents in SharePoint instead: this meant that permissions could be granted to enable anyone within the company to access the admin.
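The branching described above, where one process chooses between two surveys instead of maintaining two near-identical automations, can be sketched in Python. This is not the Power Automate flow itself: the survey identifiers, the `is_physical` flag, and the function name are all hypothetical.

```python
# Hypothetical survey identifiers; the real flow's survey names are not
# given in this portfolio.
PHYSICAL_SURVEY = "physical-skills-survey"
STANDARD_SURVEY = "standard-survey"


def build_admin_plan(event):
    """Decide which survey an event needs and where its documents live.

    `event` is a plain dict with a hypothetical `is_physical` flag.
    """
    survey = PHYSICAL_SURVEY if event.get("is_physical") else STANDARD_SURVEY
    return {
        "event_id": event["id"],
        "survey": survey,
        # Shared storage rather than one person's OneDrive.
        "document_store": "sharepoint",
    }


print(build_admin_plan({"id": 1, "is_physical": True}))
print(build_admin_plan({"id": 2}))
```

Keeping the decision in one place means a change to the shared steps only has to be made once.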

One difficulty I faced with Power Automate was that populating the documents with the details of the registrants and the output of the SurveyMonkey forms was harder than in Zapier, which contained a native integration. In Power Automate, I had to create a custom API request, which required me to activate a developer account in SurveyMonkey. I then had to create custom automation scripts in Excel which populated the reports. The reports were all generated in Excel as this was the practice preferred by clients. I had initially considered creating a reporting portal that would enable clients to log in and look at all their data across all their events, with downloadable reports for each individual event. However, this approach was not explored as qualitative feedback from the Evaluation Phase suggested that clients appreciated the simple workflow, with Excel reports being sent by email.

Implementation Phase:

The Implementation phase saw the launch of the new system in a live environment. I ensured all users received consistent support during this period, hosting much of the learning content on the IKON SharePoint site and utilising Teams for notifications and updates. I organised training sessions and drop-in clinics to address user concerns and facilitate a smooth transition. These sessions were tailored to different user groups, ensuring that trainers, administrators, and other stakeholders received the specific guidance they needed to perform their roles effectively within the new system.

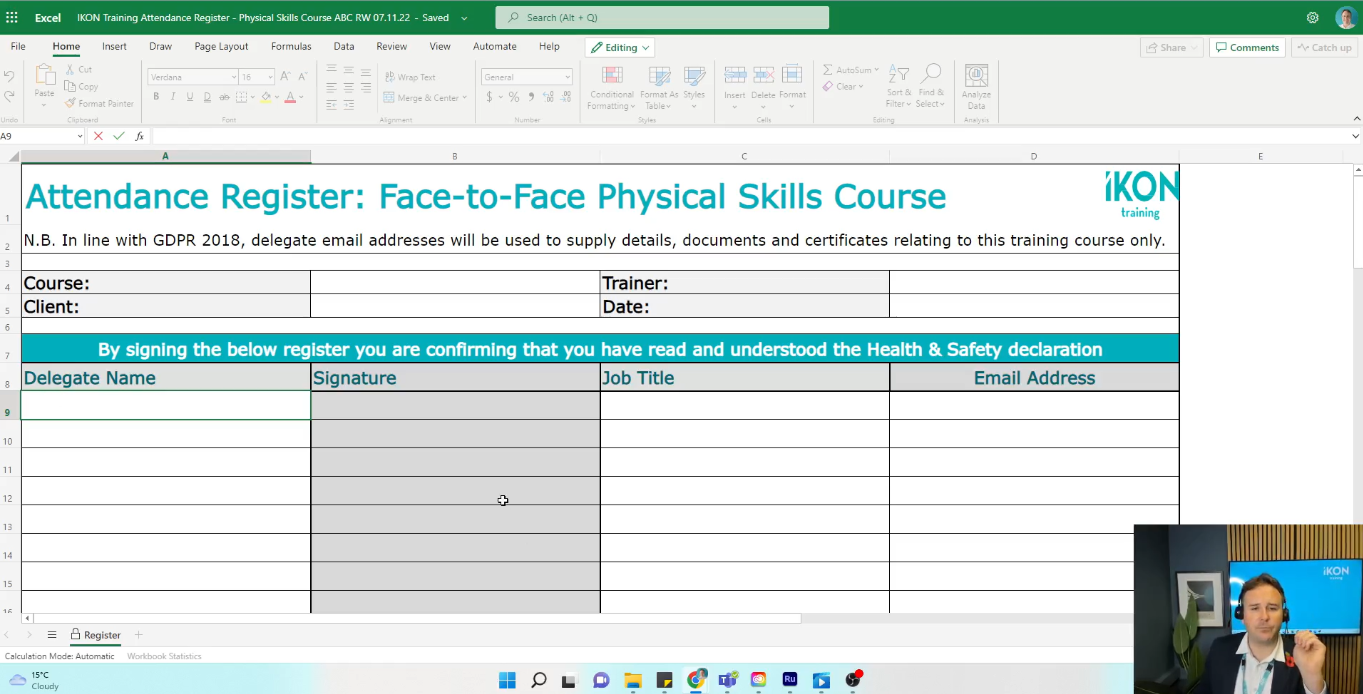

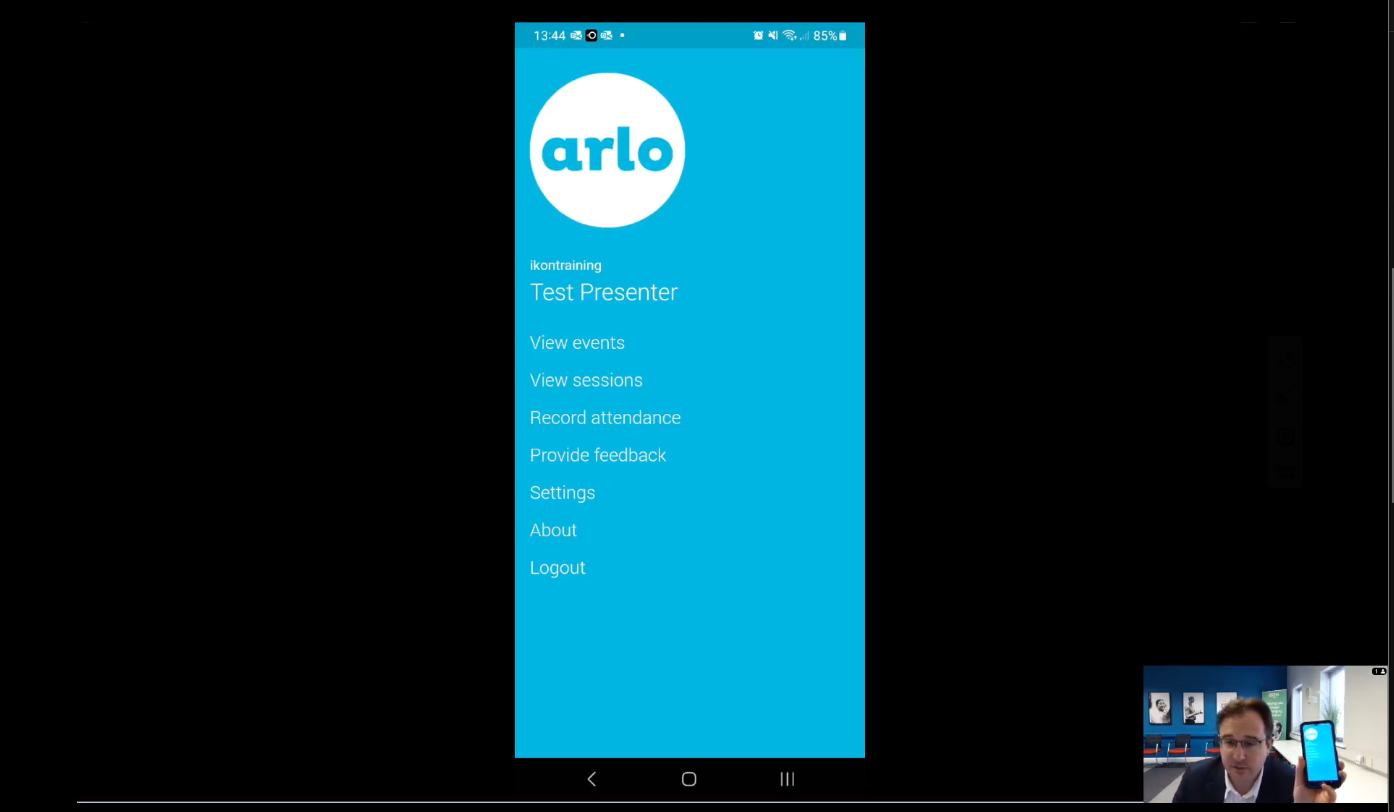

A significant challenge during implementation was managing resistance to change. Some users were hesitant to adopt the new system, preferring the familiarity of legacy processes. One of the areas of tension was the use of the Arlo-For-Mobile App, which was only available to use on a mobile phone. IKON Trainers had never had to use their own private devices for work purposes. Although we discussed providing company devices, this was ruled out due to costs, and the difficulty of trainers having to carry an additional device with them when they already carry a company laptop. To address this, I employed a combination of formal training and informal support, including one-on-one coaching sessions and real-time troubleshooting for the use of the Arlo app. I monitored usage and provided support where trainers were not using it correctly. I liaised with our external IT company to ensure that the personal devices were covered within the scope of our GDPR and cyber-security protocols, including the Cyber Essentials framework. This approach helped build user confidence and ensured a higher adoption rate of the new technology. I also implemented a feedback loop, allowing users to report issues and suggest improvements, all of which were considered and many of which were actioned. This helped in refining the system and fostering a sense of ownership among stakeholders.

Evaluation and Adjustments:

Following the implementation, I entered the Evaluation and Adjustments phase, where I conducted regular reviews of the system to identify areas for improvement. This included refining workflows, such as updating the certification expiration process to make it more efficient and optimising the learner registration pathway to reduce the time required for enrolment. I used a combination of user feedback, system analytics, and direct observation to identify inconsistencies and areas for enhancement.

One key adjustment was the automation of routine administrative tasks, such as generating attendance certificates and sending follow-up emails to learners. By refining these processes, I was able to free up significant time for the administrative team, allowing them to focus on more value-added activities. I also conducted periodic training refreshers and created additional support materials to address common issues that emerged after the initial rollout. One unexpected outcome was that many of our learners and our clients actually felt bombarded by email reminders. I worked to reduce the number of reminders and reworked the wording and details to reduce the number of emails but increase their effect. Wherever possible, I tried to combine emails coming for different purposes, although this was not always achievable due to emails coming from different software origin points.

The feedback from stakeholders was overwhelmingly positive. However, there were certain areas where we sought to make improvements based on feedback:

- Reduced the frequency of email reminders to reduce learners feeling overwhelmed by communication.

- Created simple 'click here to amend registrations' buttons for commissioners to make amending registrants easier.

- Enabled the sending of Trainer Synopsis hyperlink before the end of the session, so trainers can complete the synopsis of early-finishing events before leaving the venue.

Throughout the entire ADDIE process, my focus remained on ensuring robustness, interoperability, support, compliance, collaboration, and consistency—key values of our Digital Development Strategy. My work in steering this strategy involved a deep understanding of the technical, legal, and operational aspects of learning technology implementation, showcasing my ability to engage thoughtfully with both the broader legislative context and the specific operational demands.

Reflective Comment on Software Integration

The decision to integrate Arlo as our TMS significantly enhanced operational efficiency, though it came with challenges. Initially, I was hesitant to move away from a bespoke PowerApps and SharePoint solution because of the control it provided. However, the tight timeframe and my growing understanding of the complexities involved in building a bespoke system led me to choose Arlo. I was also aware of the vulnerability of creating a system without an outside agency to support me: not only would that make it slower for me to create a product, there was a higher likelihood of errors or bugs, as well as the reduced scrutiny of a dedicated legal support team. By using an external product, these negative outcomes were lessened, but the off-the-shelf nature of the external product brought different challenges.

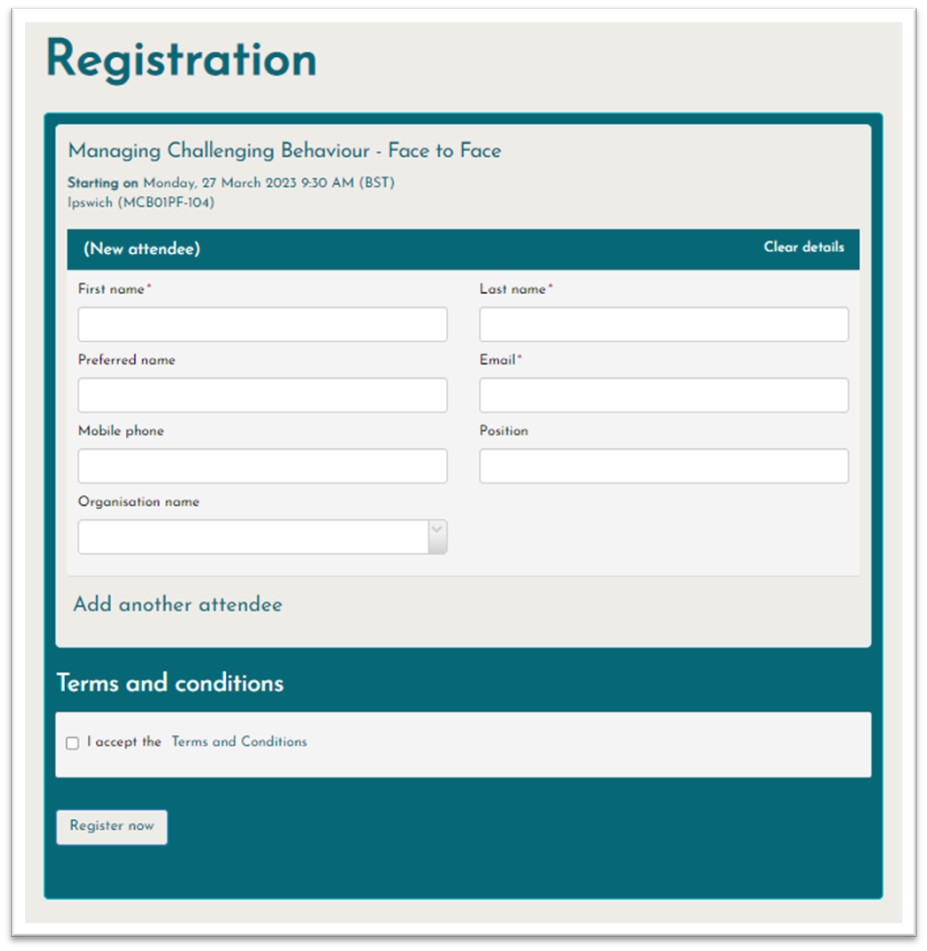

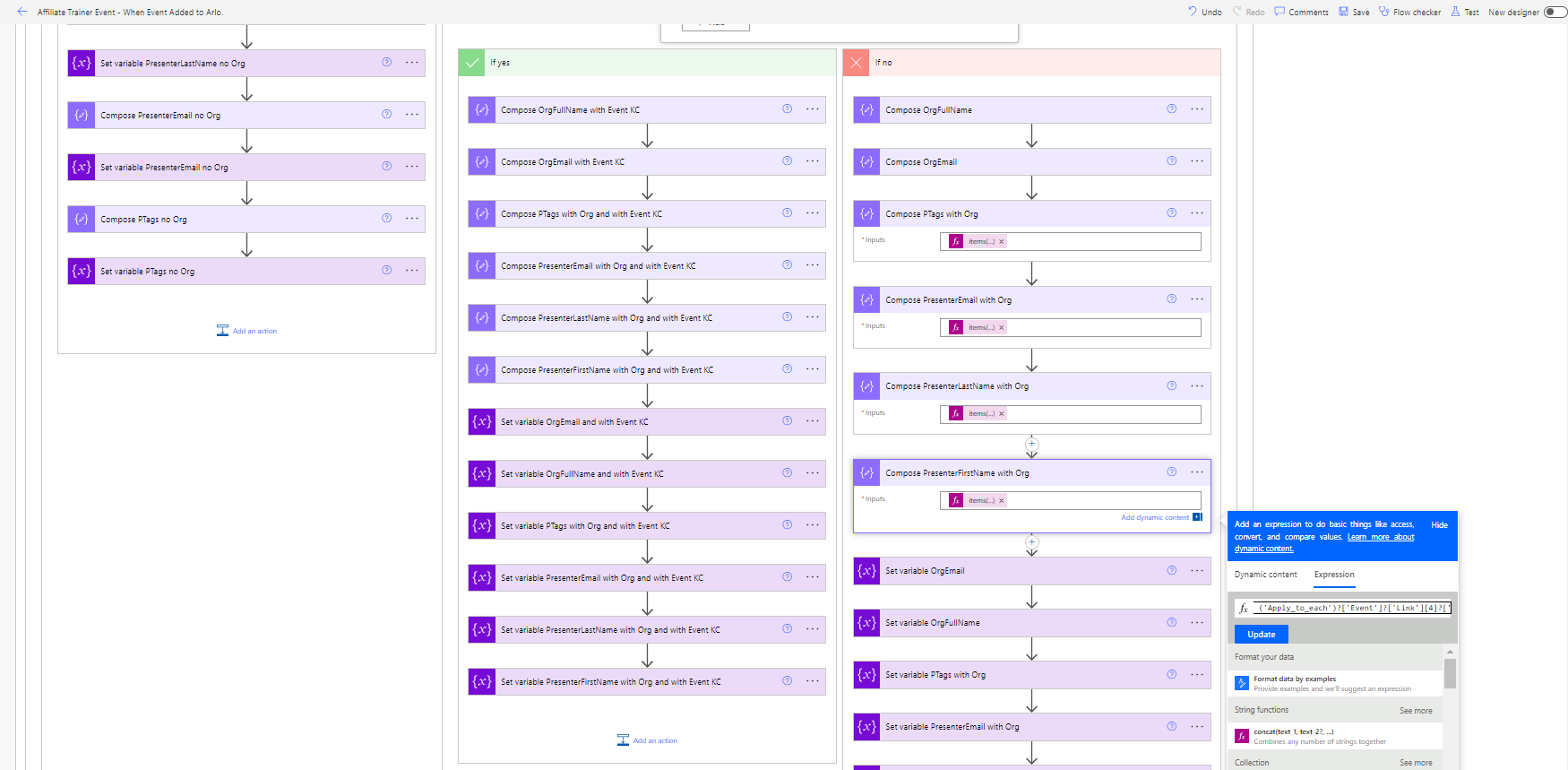

Early testing revealed significant misalignments between Arlo's intended use case (hosting public events where individuals self-register) and IKON's requirements, where client commissioners register their learners for private sessions. This required me to use automation tools like Zapier, Power Automate, and Azure Logic Apps to create custom workflows that enabled Arlo to meet IKON's unique needs. These included automations that sent registration links directly to commissioners and reminders ahead of events, as well as automations that generated populated reports from data in Arlo and SurveyMonkey. These reports were sent to the administration team for approval before being forwarded to clients, ensuring accuracy and saving hours of administrative effort. The impact of these automations was very high: without them, the Digital Development Strategy would have failed, as it would not have met IKON's need for a robust, interoperable TMS. However, building these automations, and choosing which software to build them in, raised further considerations and challenges.

Future Developments:

Reflecting on the integration process, I learned the importance of adaptability and creative problem-solving when working with off-the-shelf software. The initial challenges with Arlo required me to think outside the box and leverage various automation tools to bridge the gaps between what the software offered and what the organisation needed. This experience also underscored the value of continuous testing and iteration, as the feedback from early users was instrumental in shaping the final solution.

I have learnt that in-house developed solutions are simpler to control and enable greater levels of customisation. However, they create vulnerability, as only the in-house developer(s) know how to operate and amend the process. There needs to be a balance between external software and internal customisation, as well as clear documentation including visuals. In future, I will try to create wiki-style documentation with flowcharts for any automated processes I develop, so that others within the organisation can learn how to amend or adapt the process. This may be wishful thinking, as a certain level of technical knowledge and ability will always be required, but the process of documentation, and the accountability of doing so, achieves the following goals: it reduces vulnerability, enables future developers to take over where I leave off, and provides me with a reflective opportunity to look at processes and automations from a higher viewpoint. The ideal pathway is to use a mixture of off-the-shelf software, to save development time and reduce single points of failure, and some well-documented in-house custom development.

I feel that it is the role of a learning technologist to always stay aware of the latest developments in learning technology solutions and to keep their skills current in the core software. This approach allows us to balance our experience of developing solutions using existing tools and technologies with the advantages of adopting new and innovative software solutions. For example, as AI has risen in prominence in this area, it seems like a logical next step for creating learning materials and solutions. However, I am wary of over-reliance on external solutions, especially when considering issues such as data privacy, intellectual property, and the complexities of integrating AI-driven tools with existing systems. Moreover, there are significant sustainability concerns, such as the high energy consumption and environmental impact of data centres that power AI and other cloud-based technologies.

In the future, a responsible approach as a learning technologist involves not only leveraging these advancements for efficiency and scalability but also carefully evaluating the operational, ethical, environmental and financial implications of their use. It's essential to critically assess whether these technologies truly enhance learning experiences or if they are being adopted for the sake of trend-chasing. By maintaining a balance between innovation and sustainability, we can ensure that we are providing learners with impactful, future-proof solutions while contributing to the longevity and well-being of the educational ecosystem as a whole.

Core Area 1b Technical Knowledge and Ability in the Use of Learning Technology

Technical Integrations Across Multiple Platforms

In my role, I've used a number of different tools and platforms to support operational processes and learning delivery. This section goes beyond the Training Management System (TMS) to look at other technologies I used, and what I learned from using them. These tools included Arlo, Power Automate, Zapier, Azure Logic Apps, and Moodle API integrations, each of which presented different challenges and opportunities for developing my skills.

From Arlo to Automations: Improving Workflows as My Ability Increased

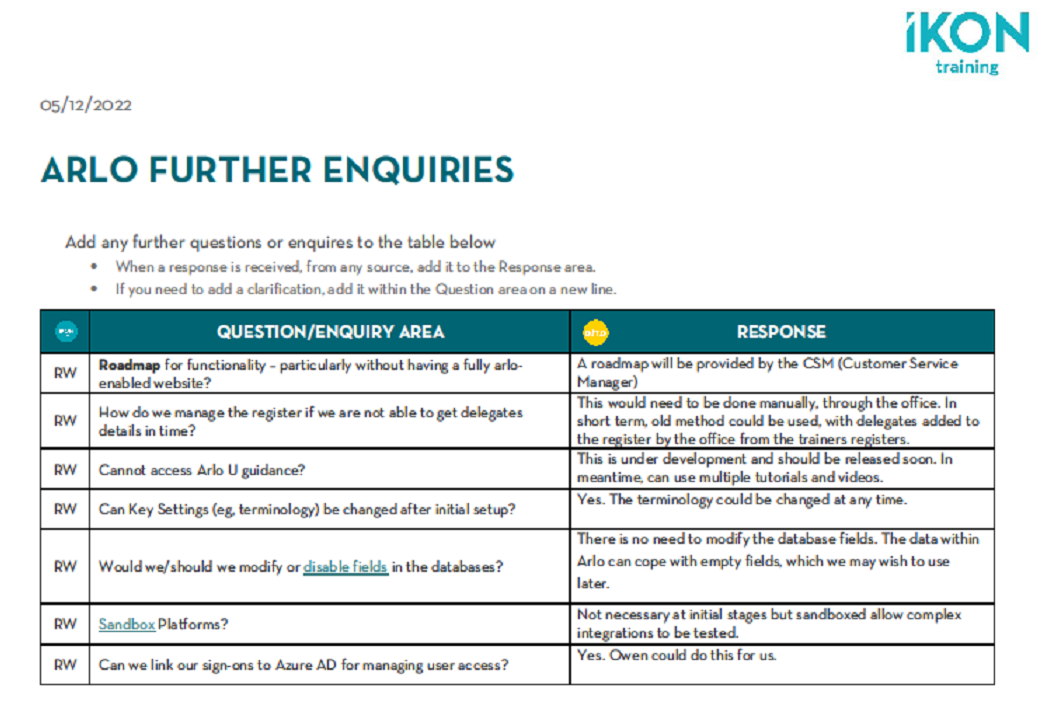

One of the main systems I worked with was Arlo, which we chose because of its ability to adapt to our needs and scale with the business. However, it wasn't a perfect fit for our specific processes. Initially, my efforts focused on communicating with the customer service support team at Arlo to request platform adaptations to meet our needs. I created a collaborative document for our team to communicate the elements of the Arlo TMS that were not meeting our requirements. Despite clear communication, the standard Arlo platform was not able to fulfil our process needs. This led me to explore the automation approach.

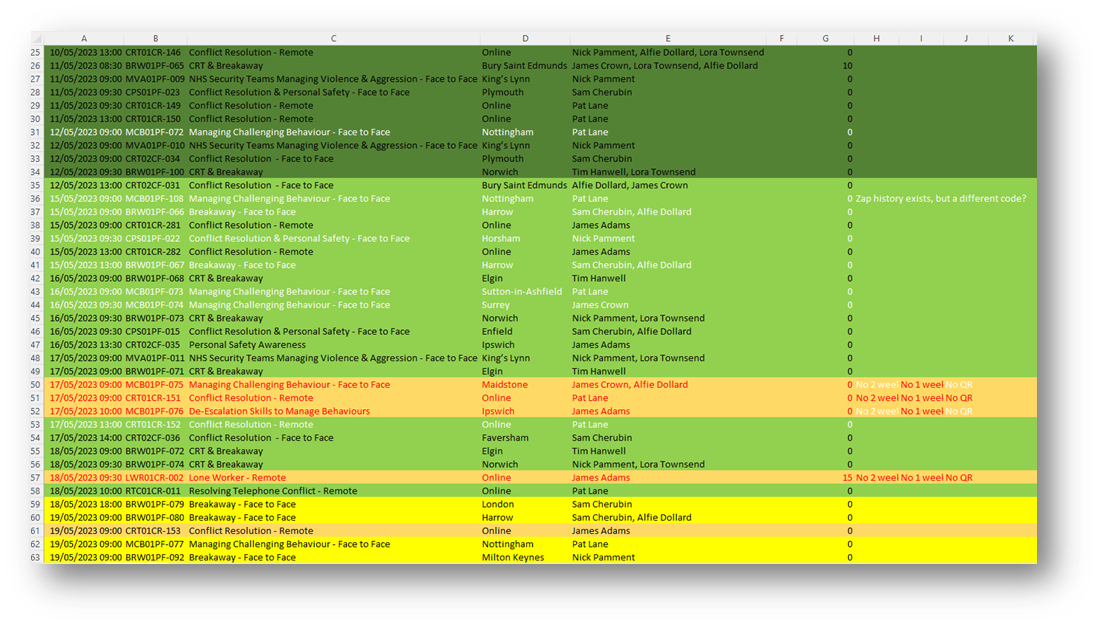

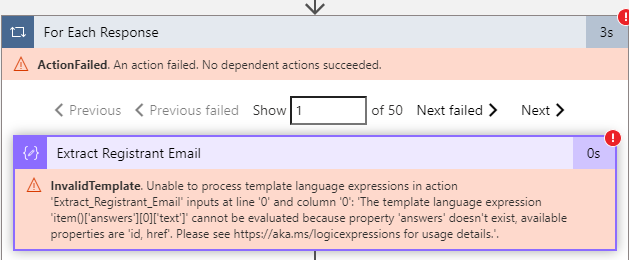

Arlo's documentation suggested that it would be simple to set up custom automations to bridge these gaps. Their preferred automation service was Zapier, which had a native connector enabling me to link Arlo with other tools like SurveyMonkey. I worked with Zapier for around six months, receiving training from one of Arlo's Zapier experts to create automated workflows that would better suit our requirements. I worked to develop almost thirty different automations in Zapier. However, despite significant effort, there were many limitations in the flow of data, and vulnerabilities emerged due to the constraints of Zapier's 'no-code' structure. For instance, automations couldn't run for more than a month, which didn't fit our planning cycles. If an event was booked more than a month in advance, the automated communication for learners and clients would not be sent. I monitored event communication for a period of over six weeks, necessitating the creation of this colour-coded spreadsheet to track whether event communications were sent with or without errors, delays, or re-triggering. As you can see, a significant number of events are not 'green', meaning that the automations would need manual checking for each and every trigger. This was not acceptable and required a solution that reduced the need to manually check whether automations had worked.
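The one-month limit described above can be expressed as a simple check for which bookings would fall outside the window and therefore need manual follow-up. This is a sketch under assumptions: 'a month' is approximated as 30 days, the seven-day reminder lead time is hypothetical, and the function is illustrative rather than anything Zapier provides.

```python
from datetime import date, timedelta

# Assumption: 'a month' is approximated as 30 days for this sketch.
MAX_WAIT = timedelta(days=30)


def needs_manual_check(booked_on, event_date, lead_time=timedelta(days=7)):
    """Return True if the wait between booking and sending exceeds MAX_WAIT.

    lead_time is a hypothetical 'send the reminder this long before the
    event' setting.
    """
    send_on = event_date - lead_time
    return (send_on - booked_on) > MAX_WAIT


# Booked two months ahead: the wait is too long for the delay to survive.
print(needs_manual_check(date(2023, 1, 1), date(2023, 3, 1)))
# Booked under three weeks ahead: within the window.
print(needs_manual_check(date(2023, 1, 1), date(2023, 1, 20)))
```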

Additionally, all admin documents were being stored on my personal OneDrive, as Zapier lacked the ability to connect to a shared drive such as SharePoint. This made document creation a vulnerable process (when it worked), as the documents were meant to be shared files. Over time, I became increasingly convinced that Zapier was not suitable for the long-term resilience of our operational processes, particularly as the costs spiralled with the number of automations.

Solving the Integration Issue

My initial thought was to explore other TMS providers from the list, hoping they might offer a better solution. However, given that Arlo had promised a 'seamless' integration, I became sceptical of other providers' marketing claims. I also revisited the PowerApps solution I had begun at the start of the project, before choosing Arlo. While PowerApps offered a high degree of customisation, I quickly realised that bringing it to the same level of functionality as Arlo would require not only significant time but also considerable upskilling on my part. The learning curve and time investment simply wouldn't have been viable given the project's timelines. This experience highlighted an important balance: the cost of investing time to learn new skills must be weighed against the financial implications, both in terms of salary and project deadlines. Sometimes, even if a solution is technically possible, it may not be the most practical or cost-effective choice.

In the interim, I began to experiment with Power Automate, which was included in our Microsoft 365 licence and therefore incurred no additional costs. Several 'flows' created before I joined the organisation had stopped working. As I worked to fix or replace these, I learnt more about the value of this automation suite. I explored how to customise and extend the existing automations within Power Automate, learning how they worked. I studied several online courses on Power Automate via LinkedIn Learning, which significantly improved my confidence. I then began experimenting with duplicating the Zapier automations in Power Automate, with the goal of transitioning them away from Zapier.

I read Arlo's developer documentation extensively to see whether it would provide guidance on how to 'fix' the issues with the Zapier automations. As I read more, I discovered that all the automations relied on API calls to the Arlo 'back-end'. I had learnt how to make API calls within my Web Development training, so I attempted to employ these skills to develop a solution to the automation issues.

Making API Calls

I collaborated with a small group of my former course-mates from my BSc in Computer Science. We worked on how to conduct API calls to Arlo from within Zapier. This process was complex, requiring JavaScript code hosted within the no-code editor. Despite rigorous testing, the Zapier-Arlo API integration was unreliable due to the unpredictable structure of Arlo's API and its use of custom variables.

However, as I learned more about Power Automate, I found its HTTP connector far simpler to implement. Once I had learnt how to use this, I leveraged the data parser function to organise the XML data returned from successful Arlo API calls. Power Automate's JSON Parser was user-friendly, providing a helpful function to create a template based on sample input.
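To illustrate what 'organising' an API response involves, the sketch below parses a small XML payload into a list of dictionaries using Python's standard library. It is not the Power Automate flow, and the sample payload and element names (`Events`, `Event`, `EventID`, `Name`, `StartDate`) are hypothetical stand-ins rather than Arlo's actual schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample response; the element names are illustrative only.
SAMPLE = """<Events>
  <Event><EventID>101</EventID><Name>Sample course A</Name><StartDate>2023-05-02</StartDate></Event>
  <Event><EventID>102</EventID><Name>Sample course B</Name><StartDate>2023-04-18</StartDate></Event>
</Events>"""


def parse_events(xml_text):
    """Turn each <Event> element into a flat dict of tag -> text."""
    root = ET.fromstring(xml_text)
    return [
        {child.tag: child.text for child in event}
        for event in root.findall("Event")
    ]


events = parse_events(SAMPLE)
# ISO dates sort correctly as plain strings.
for event in sorted(events, key=lambda e: e["StartDate"]):
    print(event["EventID"], event["Name"], event["StartDate"])
```

Once the data is in a predictable shape like this, later steps can refer to fields by name instead of picking values out of raw text.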

I referred to Arlo's developer documentation to understand the limits of available API requests. This enabled me to make use of filters and sorting mechanisms, simplifying the processing of API data after parsing. I also had to build in various conditions to prevent flow failures and unexpected outcomes. Over the course of a year, I successfully transitioned the majority of automations from Zapier to Power Automate, reducing costs and improving the functionality of workflows. The Power Automate flows were more reliable, had no time limits, and did not incur additional costs. To ensure resilience, I frequently consulted with our external IT providers to confirm that the automations would function as expected, as Microsoft's documentation on automation limitations was unclear.
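The kind of guard conditions mentioned above can be illustrated in Python. This is a sketch only: the field names (`Email`, `Status`), the `registrants` key, and the shape of the parsed data are hypothetical, not Arlo's actual schema, and the real checks were built as conditions inside Power Automate.

```python
def registrants_to_contact(parsed_response):
    """Return registrants that are safe to email, or an empty list."""
    # Guard 1: the API call may have returned nothing usable.
    if not isinstance(parsed_response, dict):
        return []
    registrants = parsed_response.get("registrants") or []
    # Guard 2: skip cancelled records and records with no email address.
    valid = [
        r for r in registrants
        if r.get("Email") and r.get("Status") != "Cancelled"
    ]
    # Sort so downstream steps always see a predictable order.
    return sorted(valid, key=lambda r: r["Email"].lower())


sample = {
    "registrants": [
        {"Email": "b@example.org", "Status": "Approved"},
        {"Email": "", "Status": "Approved"},
        {"Email": "a@example.org", "Status": "Cancelled"},
        {"Email": "A2@example.org", "Status": "Approved"},
    ]
}
print(registrants_to_contact(sample))
print(registrants_to_contact(None))
```

Returning an empty list instead of raising an error lets later steps finish quietly when there is nothing to send.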

By far, the most complex aspect of the transition was automating the admin process. This had been two 24-step 'Zaps' in Zapier, but I managed to condense it into a single automation within Power Automate. Additionally, I introduced a Teams approval process. Unlike the Zapier workflow, which simply created admin documents, the new process enabled the Operations team to inspect the documents via a Teams notification. If they met the required standards, the documents could be emailed to the client with the click of an 'Approve' button.

Lessons from Transitioning from Zapier to Power Automate

Moving everything to Power Automate took time, but it proved to be a more practical choice. Unlike Zapier, Power Automate could handle more complex workflows, was included in our existing licensing agreements (saving costs), and was better integrated with SharePoint, meaning data could be accessed by others in my absence. This transition taught me a great deal about evaluating the value of different platforms, not just from a technical perspective but also in terms of business continuity. If I had the opportunity to begin again, I would try not to use Zapier: although it is 'simple', its restrictions are almost as high as its cost. In an ideal world, a software solution would not require additional automations to enable it to function. If I needed to create automations in the future without access to Office 365, I would consider other platforms.

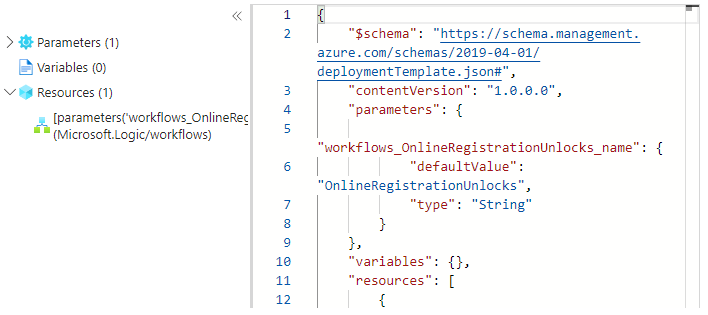

Exploring Azure Logic Apps

After becoming more comfortable with Power Automate, I decided to explore Azure Logic Apps as an alternative for building automations. Logic Apps had some benefits: it was faster to develop with, thanks to features like the AI-powered code checker, and it provided better error messages, which helped me diagnose issues more easily. I initially built some flows using Logic Apps to link data between Arlo and Moodle, ensuring that only learners who had attended their courses had access to the Moodle resources. However, it turned out that Logic Apps was more expensive to run, and the unpredictable costs made it hard to justify compared to Power Automate, which was included in our licences. Despite this, the time I spent using Logic Apps gave me a better understanding of automation design and coding workflows from scratch.

Comparison of PowerApps and Arlo as a TMS

PowerApps provided a high degree of customisation, ideal for bespoke solutions tailored to our specific organisational needs. However, it required significant maintenance, development time, and technical input, which would have been resource-intensive given our time constraints and my relative inexperience with the software. In contrast, Arlo offered a comprehensive, off-the-shelf solution with robust capabilities and integration options. The key challenge was adapting Arlo to fit IKON’s requirements—something I addressed by building custom automations to extend its functionality. This experience underscored the value of leveraging established platforms to reduce development time and ensure reliability while balancing the need for customisation through additional automations. However, the negative outcome of this is a 'Frankenstein' style system which, although streamlined and robust, is difficult to document.

The comparison between these two approaches provided valuable insights into the trade-offs between custom-built and commercially available solutions. While PowerApps offered greater flexibility, the time and resources required to maintain and update a bespoke system were significant. On the other hand, Arlo’s ready-made features allowed for faster implementation but required creative workarounds to meet our unique needs. There is an ongoing subscription cost with using external software, but the reduced onsite maintenance is a clear benefit. This experience has informed my approach to future projects, where I now place a greater emphasis on scalability, sustainability, and the ability to integrate with other systems when selecting technology solutions.

Comparison of Zapier and Power Automate for Automation Creation

Zapier's user-friendly interface and extensive app integrations made it an attractive option for automating workflows, and it was the suggested method of customising the Arlo platform. However, its cost and limitations prompted me to explore Power Automate. In particular, Zapier's delay step would time out at one month, meaning that any event planned for more than a month in the future would not be subject to the AutoAdmin process described above. Despite its steeper learning curve, Power Automate offered more robust and scalable automation capabilities, particularly for complex workflows, API calls, and integrations with Office 365 applications. Over the course of the first six months after launch, I moved all automations out of Zapier at a significant saving. This transition highlighted the importance of choosing the right tool for long-term sustainability and cost-effectiveness.

Another major advantage of Power Automate over Zapier is the ease of adding human 'approvals' into the process. Zapier has a separate portal for this, which requires a login, whereas in Power Automate, when a complex automation needs a checkpoint, the approval can be handled in Teams. I used this to great effect in the Admin workflow, which enables the operations manager to see, amend, and approve the administration documents before they are sent out to the client, all in one place.

Comparison of Power Automate and Logic Apps

During the integration process, I also experimented with Azure Logic Apps as an alternative to Power Automate. Power Automate can be slow to run, and even slower to load the graphical user interface designed to make the creation of automations easier for casual users. Logic Apps provided a highly flexible environment and allowed me to create advanced automations swiftly. I particularly benefited from AI-powered code checkers that made diagnosing and debugging errors more straightforward. I was able to create the basic structure of an automation in mere minutes using Copilot. However, I found that the unexpected costs associated with using Logic Apps quickly added up, with curious and unpredictable pricing structures, especially given the scope of the project. In contrast, Power Automate was included within our existing licensing agreements, making it a far more cost-effective option. While Logic Apps offered certain advantages, such as improved error handling, speed of development, and more detailed debugging capabilities, Power Automate ultimately provided the balance of cost-efficiency and robust functionality needed to deliver the project successfully.

By combining these technologies, I ensured that our systems were resilient, efficient, and capable of supporting the ongoing needs of our learners and stakeholders. My role in selecting and integrating these tools has significantly influenced our operational processes and demonstrated my capability to blend strategic decision-making with hands-on technical expertise. This experience has also deepened my understanding of the interplay between different technologies and the importance of adaptability in achieving successful outcomes in learning technology implementation.

Making API calls in other software

One of the more complex integrations I worked on involved linking Arlo with Moodle through API calls in Power Automate. My goal was to automate learner enrolments, so that when someone was marked as 'attended' in Arlo, their details were automatically updated in Moodle, and they gained access to post-course materials. This integration required me to learn how to work with an additional API in a practical way: understanding the JSON formatting of Moodle, making requests with a different authentication process, and handling the different data structures of both systems. It was a steep learning curve at times, but by the end of the project I had gained a much deeper understanding of how different platforms connect and how to ensure data flows smoothly. I also experimented with making API calls to SurveyMonkey to see whether this would work, and whether there were any efficiency savings to be made. However, the flows that used an HTTP request to SurveyMonkey were later refactored, because the native Power Automate connector was suitable and more stable. The HTTP request required the creation of a token, which would only last for a few weeks, whereas the native Power Automate connector to SurveyMonkey did not have these expiration problems. I learnt from this that although a custom API request can be made, a native connection should be preferred if it fulfils the purpose of the request, as this is more robust and resilient.
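To illustrate the shape of such a request, the sketch below builds a call to Moodle's REST web service for manual enrolment. It is a hedged example, not the flow I built in Power Automate: the site URL, token, and IDs are placeholders, and the role ID of 5 assumes the default student role of a standard Moodle installation. The function only builds the request; it does not send it.

```python
from urllib.parse import urlencode


def build_enrol_request(base_url: str, token: str, user_id: int,
                        course_id: int, role_id: int = 5) -> tuple[str, str]:
    """Build the endpoint and form body for a Moodle manual enrolment call."""
    endpoint = f"{base_url.rstrip('/')}/webservice/rest/server.php"
    params = {
        "wstoken": token,                       # web service token (placeholder)
        "wsfunction": "enrol_manual_enrol_users",
        "moodlewsrestformat": "json",           # ask Moodle to reply in JSON
        # Moodle expects array parameters in this bracketed form.
        "enrolments[0][roleid]": role_id,       # assumption: 5 = student role
        "enrolments[0][userid]": user_id,
        "enrolments[0][courseid]": course_id,
    }
    return endpoint, urlencode(params)


endpoint, body = build_enrol_request("https://moodle.example.org", "TOKEN", 42, 7)
print(endpoint)
print(body)
```

In Power Automate the same endpoint and body would be supplied to an HTTP action, with the user and course IDs taken from the Arlo attendance record.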

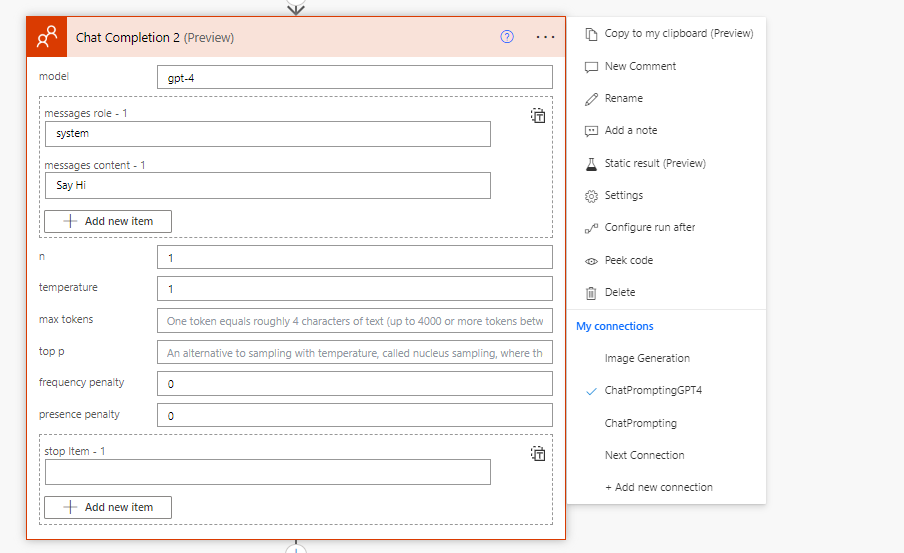

AI in PowerAutomate

I wanted to explore the possibility of integrating a Large Language Model (LLM) into Power Automate to enhance our workflows. Having previously worked with Bard (now Gemini), I looked for a native connector in Power Automate that could facilitate this integration. While I didn’t find a direct option for Bard, I discovered a connector for OpenAI's ChatGPT. To use this, I created a developer profile within ChatGPT, which opened up new opportunities to enhance our processes with AI.

Once set up, I used the LLM for a variety of purposes within our operations. One of the first applications was generating variations of email templates, which were then sent via SendGrid. This allowed me to quickly create multiple versions of emails, saving time while maintaining a personalised touch. I also used the LLM to check which email addresses had been blocked by SendGrid, ensuring that our communication remained efficient.

Another interesting application involved generating AI images to attach to new client profiles in our CMS. This provided each new lead with a unique visual identity. However, after learning about the high energy consumption involved in creating AI-generated images, I decided to move away from this practice for sustainability reasons.

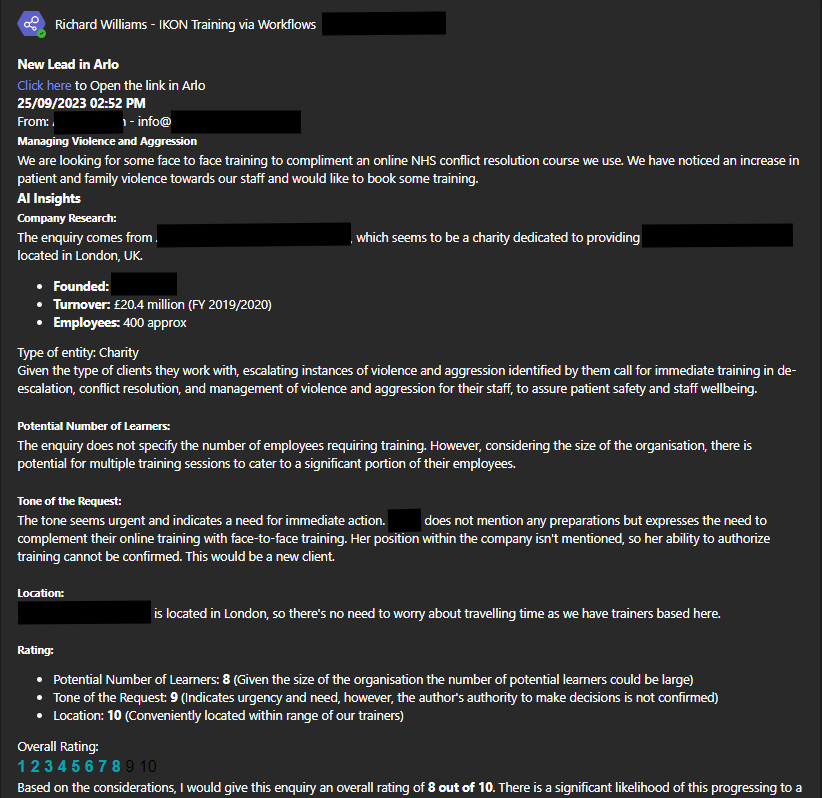

The most crucial use of the LLM was in triaging new leads that arrived via email. I designed a system where the LLM would automatically score each lead out of ten based on their likelihood to progress to a booking. To do this, I built a prompt that prepended key variables such as the lead's distance from our trainers' locations, the relevance of our current course offerings, and the tone of the request to gauge seriousness. Additionally, the LLM conducted background research on the company submitting the request, helping to prioritise high-potential leads more efficiently. This AI-assisted triage process significantly streamlined how we evaluated and followed up with new opportunities.

Developing a Learner Survey with SurveyMonkey

Another project I led involved creating a comprehensive learner feedback survey using SurveyMonkey. Initially, we considered having separate surveys tailored to different types of courses—online delivery, face-to-face, and train-the-trainer. However, upon reflection, we realised that this approach would result in inconsistent data, making it difficult to compare feedback across different delivery methods. Our goal was to gather uniform, comparable data that could provide insights across all course types.

To address this, we decided to develop a single, unified survey that branched based on the learner’s selected course type. This would allow us to maintain consistency in core questions while still gathering the specific feedback needed for each course format. The branching survey design was more complex to set up and required careful planning to ensure a smooth user experience. However, it ultimately proved more effective for data analysis.

Challenges with System Integration

One of the challenges we faced was integrating the SurveyMonkey results with our automated admin system. While the branching survey approach offered flexibility, it made it harder to link the survey responses to the automated workflows we had in place for course completion and feedback processing. The complexity of branching surveys required more advanced data handling, which was not fully compatible with our existing setup in Arlo and Power Automate. This project taught me valuable lessons about the trade-offs between survey design flexibility and system integration, and the importance of balancing technical limitations with user experience.
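One way to reduce this friction is to flatten each branched response into a record with the same core fields, whatever route the learner took through the survey. The sketch below is an assumption-laden illustration: the field names are hypothetical and do not reflect the actual survey questions or the SurveyMonkey export format.

```python
# Hypothetical core questions asked on every branch of the survey.
CORE_FIELDS = ("overall_rating", "would_recommend")


def normalise_response(raw: dict) -> dict:
    """Flatten a branched survey response into a uniform record."""
    record = {"course_type": raw.get("course_type", "unknown")}
    for field in CORE_FIELDS:
        # Missing core answers become None so every record has the same keys.
        record[field] = raw.get(field)
    # Branch-specific answers are kept together rather than discarded.
    record["branch_answers"] = {
        key: value for key, value in raw.items()
        if key not in CORE_FIELDS and key != "course_type"
    }
    return record


print(normalise_response(
    {"course_type": "online", "overall_rating": 4, "audio_quality": 5}
))
```

Downstream workflows can then rely on the core fields always being present, and treat the branch-specific answers as optional extras.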

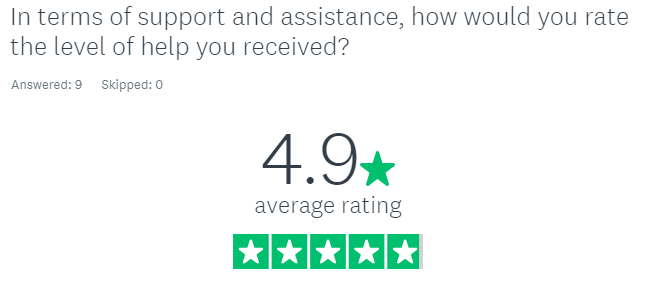

Reflection on Learner Survey Feedback

Reflecting on learner feedback was integral to our continuous improvement efforts. The feedback collected through SurveyMonkey highlighted areas for enhancement, such as simplifying the registration process and improving communication about health and safety declarations—explored further on the Wider Context page. This iterative feedback loop has been instrumental in refining our processes and ensuring that our digital solutions meet the needs of all stakeholders.

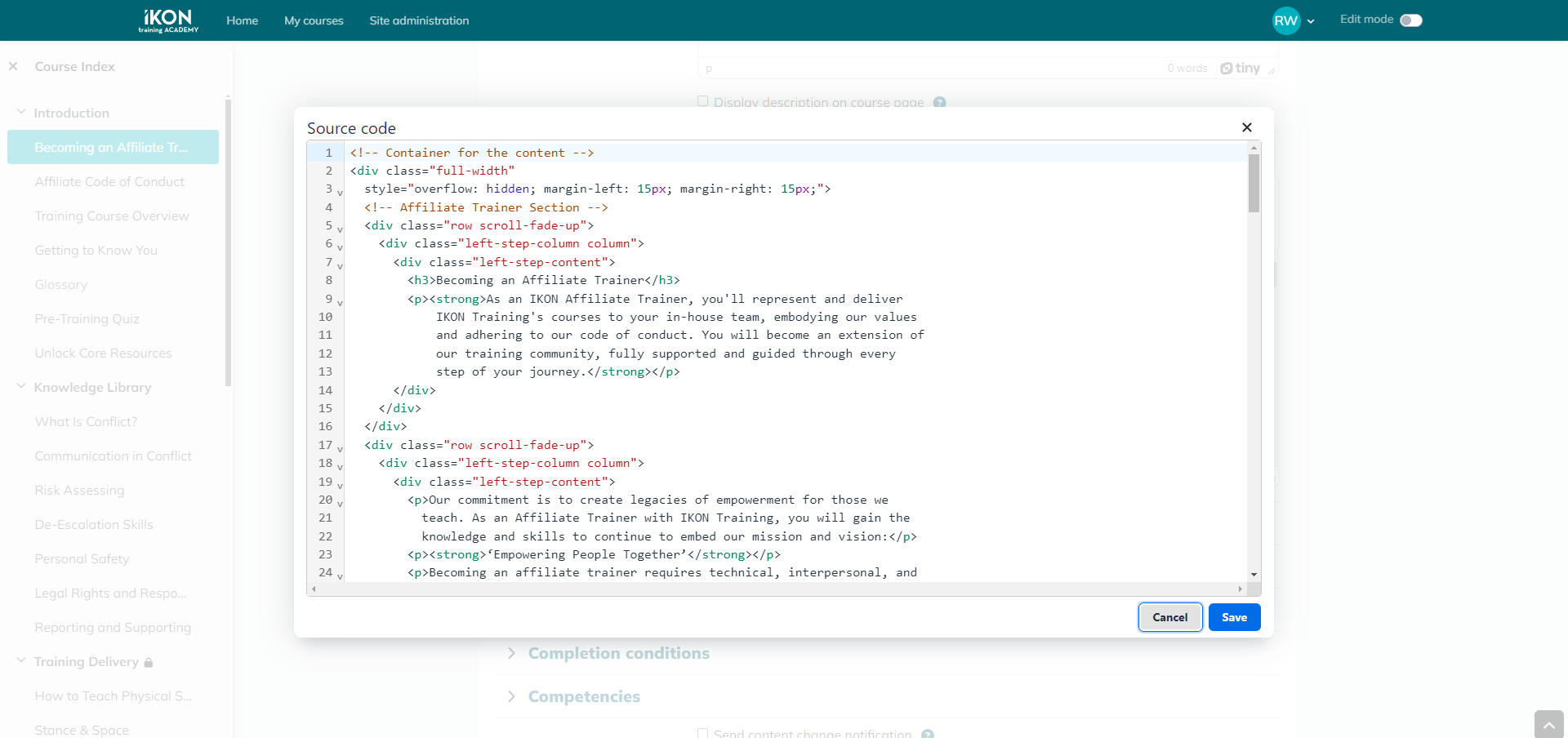

Web Development for Moodle: HTML5

In developing content for Moodle, I utilised my skills in web development, which I learnt during my BSc in Computer Science, to ensure that the platform was user-friendly, visually cohesive, and functional across devices. One of the key advantages of using HTML5 was its simplicity and flexibility. By building pages directly in HTML5, I ensured that the content could be easily modified without the need for additional dependencies. This allowed us to match the platform’s design to the corporate fonts, colours, and branding guidelines, creating a unified experience for learners.

HTML5 is the latest version of the Hypertext Markup Language used to structure web content. It offers cleaner code and better support for multimedia elements without relying on plugins like Flash, making it ideal for modern web development projects.

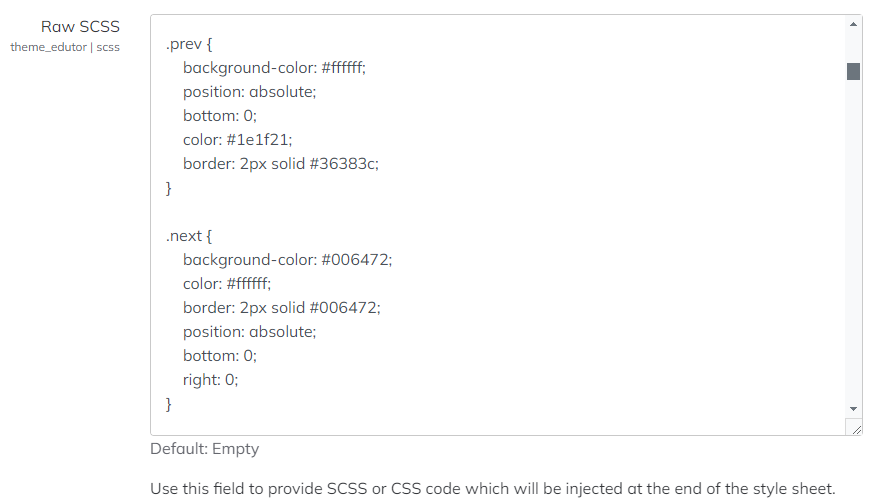

Web Development for Moodle: SCSS

For styling, I leveraged SCSS to develop a consistent look and feel throughout the site. This included designing navigation buttons that made it easier for learners to move through the platform. By using SCSS, we achieved a high degree of visual consistency across pages, ensuring that the site’s design was both responsive and accessible, particularly for mobile users.

SCSS (Sassy CSS) is a powerful preprocessor that extends regular CSS with additional features such as variables, nested rules, and mixins. This makes it easier to maintain and scale complex styles across large web projects like Moodle.

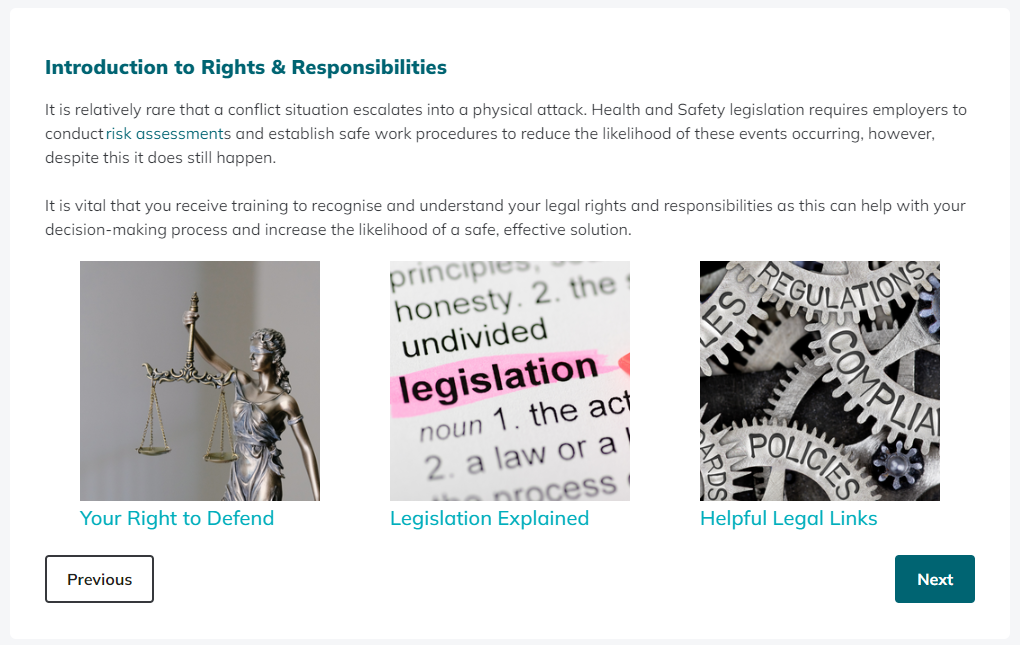

Mobile-First Design and Accessibility

This image demonstrates an example of a mobile-first, easy-to-navigate page design. I focused on simplicity, ensuring that learners could find what they needed without having to navigate the course chronologically or complete all sections at once. This approach made the platform accessible to casual learners - those with limited time - and helped to enhance the overall user experience.

All images had ALT text to enable additional accessibility, ensuring that visually impaired learners using screen readers could still understand the content and context of the images. The design adhered to WCAG (Web Content Accessibility Guidelines), ensuring that text size, contrast ratios, and navigation elements were optimised for accessibility across devices. This commitment to accessibility was key in creating an inclusive learning environment that catered to a diverse range of learner needs.
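The contrast requirement can be checked numerically. The sketch below follows the WCAG 2.x definitions of relative luminance and contrast ratio, with the AA thresholds of 4.5:1 for normal text and 3:1 for large text. The function names are my own, and the colours shown are examples rather than the corporate palette.

```python
def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_colour: str) -> float:
    """Relative luminance of a colour given as '#RRGGBB'."""
    h = hex_colour.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colours, from 1:1 up to 21:1."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check a colour pair against the WCAG AA contrast threshold."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#000000", "#FFFFFF"), 2))
print(passes_aa("#767676", "#FFFFFF"))
```

Running a check like this against brand colours before styling buttons and body text avoids discovering contrast failures after the pages are built.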

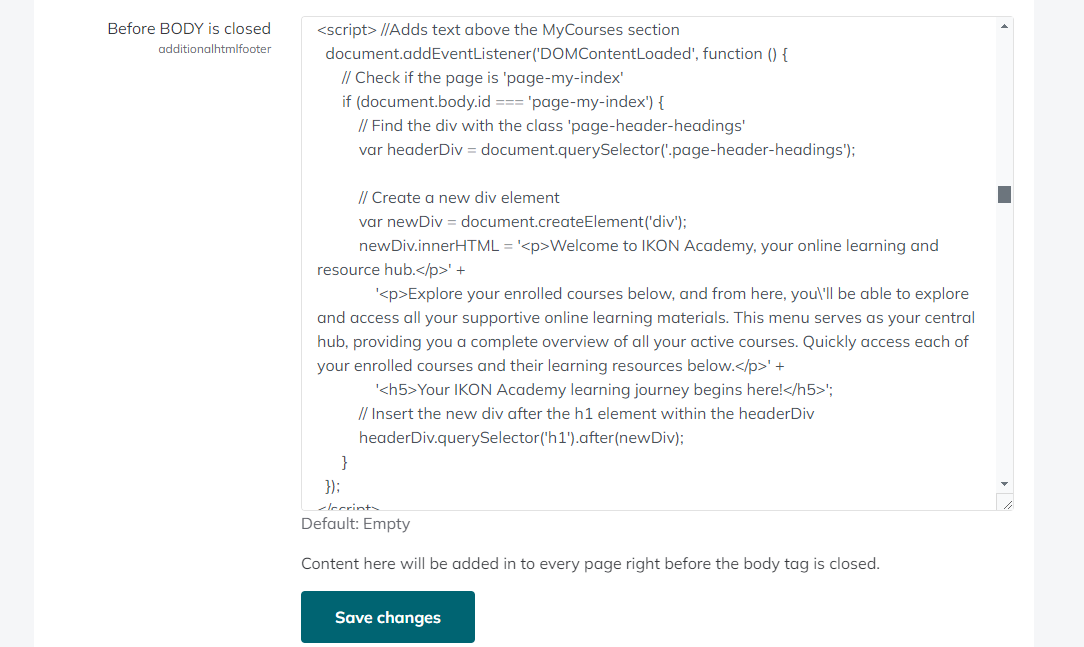

Web Development for Moodle: JavaScript

In addition to the HTML and SCSS work, I used JavaScript to add dynamic content to enhance the learner experience. One notable example is the integration of JavaScript to provide additional context on key pages, helping learners navigate the site and understand what steps to take next.

JavaScript is a versatile programming language commonly used to create interactive features on web pages. In this project, it was crucial for adding dynamic elements such as instructional prompts, improving engagement and usability for learners.

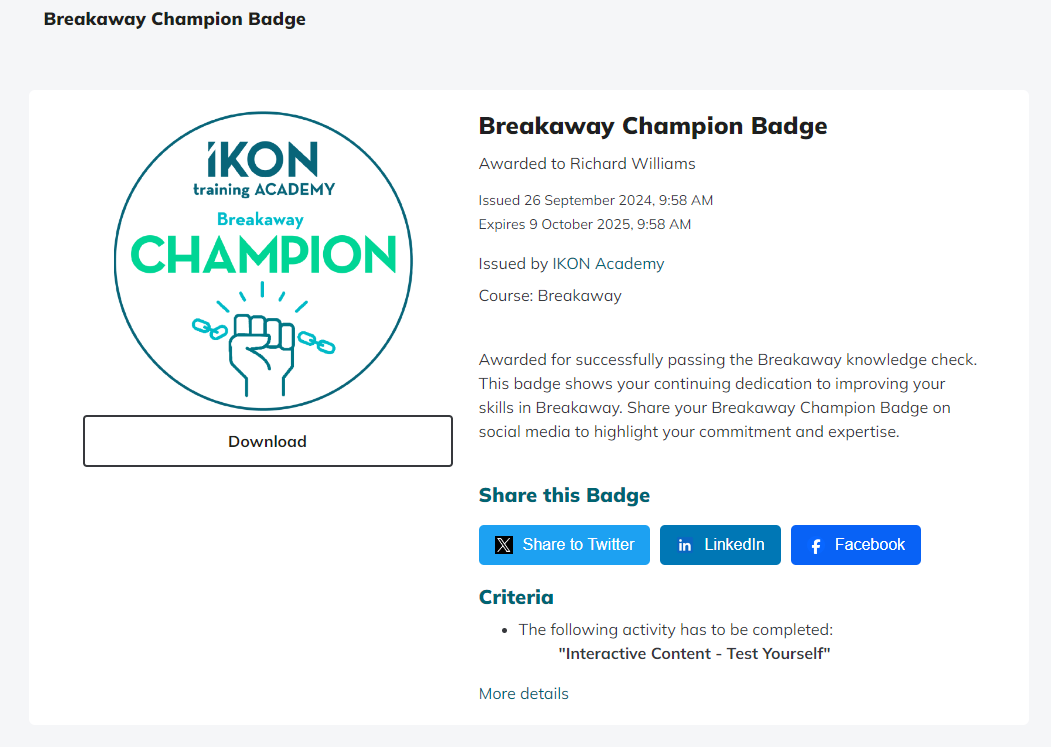

JavaScript for Dynamic Content and Sharing

Furthermore, I developed a dynamic 'Share' button for Course Badges, allowing learners to post their achievements directly to social media with pre-populated content, showcasing their success and encouraging further engagement with the platform. This functionality was developed using JavaScript to dynamically generate social media posts, allowing learners to share their progress with just a click.
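The core of a share button is a URL with the content already encoded into its query string. The button itself was written in JavaScript; the sketch below shows the same URL construction in Python for consistency with the other examples on this page. The choice of LinkedIn and X/Twitter, the badge URL, and the message text are assumptions for illustration.

```python
from urllib.parse import urlencode


def linkedin_share_url(badge_url: str) -> str:
    """Build a LinkedIn share link that points at the badge page."""
    return "https://www.linkedin.com/sharing/share-offsite/?" + urlencode(
        {"url": badge_url}
    )


def tweet_share_url(badge_url: str, text: str) -> str:
    """Build a tweet link with pre-populated text and the badge URL."""
    return "https://twitter.com/intent/tweet?" + urlencode(
        {"text": text, "url": badge_url}
    )


print(linkedin_share_url("https://example.com/badge/1"))
print(tweet_share_url("https://example.com/badge/1", "I passed!"))
```

Encoding the parameters properly matters here, because badge URLs and messages often contain characters such as spaces, slashes, and ampersands that would otherwise break the link.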

All code created for this project was backed up regularly to GitHub, ensuring version control and resilience. Additionally, I shared this code with our external IT provider for added redundancy, ensuring that the system could be quickly restored if any issues arose.

Reflection on Web-Development for Moodle

Reflecting on the development of content for Moodle, I found that balancing technical precision with user-centred design was crucial. By using tools like HTML5, SCSS, and JavaScript, I was able to build a flexible and scalable platform that enhanced learner engagement and provided a consistent user experience across devices. HTML5 offered a lightweight framework that allowed for seamless updates, while SCSS ensured visual consistency, and JavaScript added dynamic, interactive elements that guided learners through their journey. Accessibility was also a key focus, ensuring that the platform met WCAG guidelines and could be easily navigated by learners with diverse needs, including those using screen readers.

While I experimented with tools like H5P for interactive content, I found it difficult to align these elements with corporate branding and styling. Although tools like Articulate or Adobe Captivate could have provided more advanced content creation options, they came with a steep learning curve and a significant cost. Given the time constraints and the need to maximise resources, I chose to rely on my existing skills in web development, which allowed me to build content with zero outlay and full control over the design and functionality. This approach not only saved time and money but also allowed for a more customised, consistent learning experience.

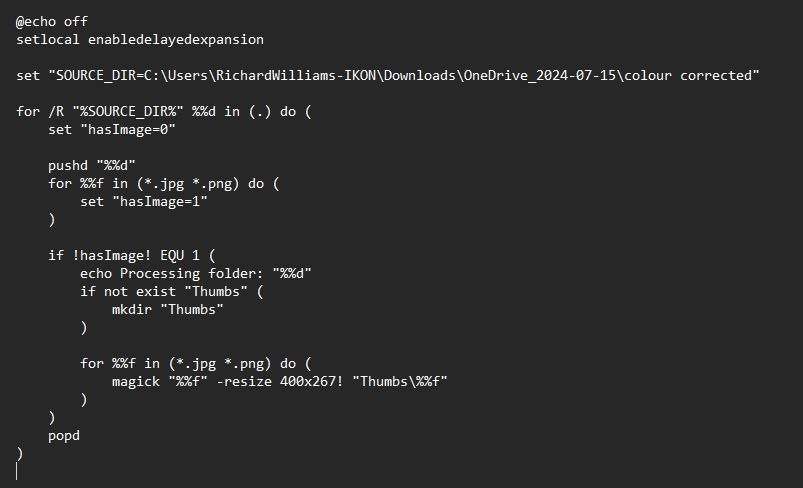

Batch Script for Image Optimisation

This script is a Batch file designed to run on Windows. I wrote it with the goal of reducing the file size of images we intended to use on our Moodle platform. By automatically resizing the images to create smaller versions (or "thumbnails"), the script helped to optimise the platform’s performance, reducing load times and improving user experience, particularly for learners with slower internet connections. To achieve this, I integrated ImageMagick, an image manipulation tool, which allowed me to resize the images efficiently within the script.

While the script successfully reduced the file sizes, I encountered an issue where multiple nested 'Thumbs' folders were created within higher-level directories. This happened because the script didn't have logic to prevent the creation of duplicate 'Thumbs' folders when it processed subdirectories. Although I fixed the issue manually by deleting the extra folders, I would have preferred to refine the script further to prevent this from happening automatically. This would have required additional logic to check for the existence of folders and prevent unnecessary nesting.
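The missing logic amounts to skipping any folder already named 'Thumbs' while walking the directory tree. The sketch below shows that idea in Python rather than Batch, as a hedged illustration of the fix and not a port of the original script. The folder name, file extensions, and target size are assumptions, and the ImageMagick command is only built, not executed.

```python
import os
from pathlib import Path

THUMBS = "Thumbs"
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumption: the formats in use


def plan_thumbnails(root: Path) -> list[tuple[Path, Path]]:
    """List (source, thumbnail) pairs without descending into Thumbs folders."""
    jobs = []
    for folder, subdirs, files in os.walk(root):
        # Pruning in place stops os.walk from entering existing Thumbs
        # folders, which is what prevents Thumbs/Thumbs/... nesting.
        subdirs[:] = [d for d in subdirs if d.lower() != THUMBS.lower()]
        for name in sorted(files):
            if Path(name).suffix.lower() in IMAGE_SUFFIXES:
                source = Path(folder) / name
                jobs.append((source, source.parent / THUMBS / name))
    return jobs


def magick_command(source: Path, target: Path, size: str = "400x400") -> list[str]:
    """Build the ImageMagick call that resizes one image to fit within `size`."""
    return ["magick", str(source), "-resize", size, str(target)]
```

Because the plan is built before anything is resized, it can be reviewed or logged first, and re-running the script over the same tree does not create further nested folders.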

Reflection on Batch File Development

On reflection, this project highlighted the balance between developing quick, functional solutions and the time required to refine and perfect them. The script achieved its primary goal, but turning it into a more polished, user-friendly tool that could be used by the design team without manual intervention would have required further development. Additionally, this experience demonstrated the importance of investing time in understanding tools like ImageMagick, which, when combined with scripting, can provide significant efficiencies in automating repetitive tasks.

Overall, this exercise emphasised the need for careful planning and testing to ensure that even small automation scripts are robust and scalable. Had I had more time, I would have liked to turn this into a standalone application that the team could use, which would not only resize images but also address any folder-structure issues automatically. It was a valuable learning experience in understanding the trade-offs between creating a functional tool and building something that is fully refined and optimised for long-term use.

Reflection on the Development of my Technical Knowledge and Ability

Developing my technical skills has been a pivotal aspect of the last few years. I have engaged in extensive training, familiarising myself with different software infrastructures and exploring their range of functionality, including APIs and developer documentation. As part of both the Digital Development Strategy and more recent projects, I created multiple custom automations within Zapier, Power Automate, and Azure Logic Apps. My skills in gathering and processing data from various APIs have improved significantly: I have moved from using no-code tools such as Zapier to make pre-determined requests to writing bespoke JSON requests in a pure-code automation suite. This process is ongoing, and I can now more easily gauge the time required to create an automation, which helps me judge whether automating a process is worthwhile compared with doing it manually.

Looking back, a lot of what I learned came from working through problems. Moving from Zapier to Power Automate taught me to think ahead about system limitations and resilience, although I admit that this is hard to do, especially when the documentation and reviews of software reveal neither its complexity nor its limitations. Trying Azure Logic Apps helped me realise the importance of considering costs when selecting tools. And setting up API integrations showed me that sometimes you have to dig into the code to get things to work properly, especially when different systems don’t talk to each other naturally. These experiences gave me the confidence to take on more complex technical challenges, and a better understanding of which tools to choose, and when.

Next Steps in Developing Technical Skills

In the future, I want to continue building my skills with APIs and automation. As we expand our use of Moodle, I plan to use Power BI and/or other data dashboard tools to better visualise the data we're gathering on learner progress and engagement. With the ability to track key metrics, such as completion rates and quiz scores, in real time, we can make data-driven decisions to enhance learning outcomes and address any gaps. Power BI’s integration with other Microsoft tools also offers the potential for seamless reporting and insights at both the learner and organisational level.

Beyond data visualisation, I aim to further explore advanced automation solutions. Automating routine processes, such as streamlining learner enrolment, storing certificates in Moodle, and improving communication workflows, could significantly reduce administrative load. This would allow us to focus more on improving content and learner experience rather than managing repetitive or duplicated tasks. By building on my current expertise in Power Automate, I intend to investigate more complex automations using Azure Logic Apps and explore AI-powered automations that can intelligently adapt to different scenarios. These advanced tools can help us scale our operations more efficiently, ensuring long-term cost-effectiveness.

Additionally, I am interested in expanding my understanding of API integrations beyond Moodle, exploring how other learning management systems, such as Canvas or Blackboard, could be integrated into our ecosystem. By becoming proficient in cross-platform API interactions, I can ensure smoother data exchange between different systems, enhancing both learner and administrative workflows. Another area I am keen to explore is the use of AI and machine learning within automations, particularly in predictive analytics, to anticipate learner needs or identify trends that can inform future course development and personalisation strategies.

To achieve these goals, I plan to continue professional development through online courses, industry certifications, and collaboration with technical experts. Staying up to date with the latest advancements in automation, data visualisation, and AI will enable me to future-proof our systems and keep us at the forefront of learning technology. As the landscape evolves, I aim to integrate more sophisticated tools that not only streamline our processes but also enhance the overall learning experience for our users.

Core Area 1c: Supporting the Deployment of Learning Technologies

Strategies for Supporting Deployment

Supporting the deployment of learning technologies goes beyond the technical setup. It involves understanding and addressing the needs of all stakeholders—including trainers, learners, and administrative staff—and ensuring that the implementation aligns with the organisation's goals. To do this successfully, I integrated several strategies from Change Management theory, focusing on communication, stakeholder engagement, and providing the right resources at the right time.

One key aspect of supporting deployment was recognising that different groups had unique needs and levels of comfort with new technologies. According to Lewin’s Change Management Model, it's important to ‘unfreeze’ old habits, which I did by engaging users early on and introducing them to the benefits of the new system. For example, I held workshops that framed the technology as a solution to their existing issues, helping them become more receptive to change.

From there, I ensured users were supported during the ‘change’ stage of the model by offering multiple training resources and regular touchpoints to keep users updated on progress. I also employed Kotter's 8-Step Change Model by creating a sense of urgency and guiding stakeholders through the change. These steps helped maintain momentum and made sure the implementation aligned with both immediate goals and long-term organisational vision.

Post-deployment, the focus shifted to ‘refreezing’ these changes—ensuring the new systems were embedded in daily practices. This involved creating self-service materials, providing ongoing technical support, and gathering feedback to continuously improve the deployment. The application of these change management principles ensured a smooth transition for all involved, reducing resistance and fostering long-term adoption of the new systems.

Engaging Stakeholders

One of my primary responsibilities was to ensure that all stakeholders were on board with the new systems being deployed. This began with early engagement sessions, where I gathered input from trainers, administrative staff, and even learners. During these sessions, I conducted surveys, interviews, and workshops to understand needs and concerns. For example, administrative staff were primarily concerned with the usability of the new system, while trainers wanted to ensure that they could access learner data easily without having to spend time checking details were correct. By addressing these concerns early on, I was able to tailor the deployment strategy to meet their expectations.

I also organised regular meetings with the training team to discuss progress, challenges, and upcoming milestones. This approach ensured that everyone was kept informed, and it fostered a sense of ownership among the stakeholders. The feedback loop established during these meetings was crucial in identifying any issues early and making the necessary adjustments.

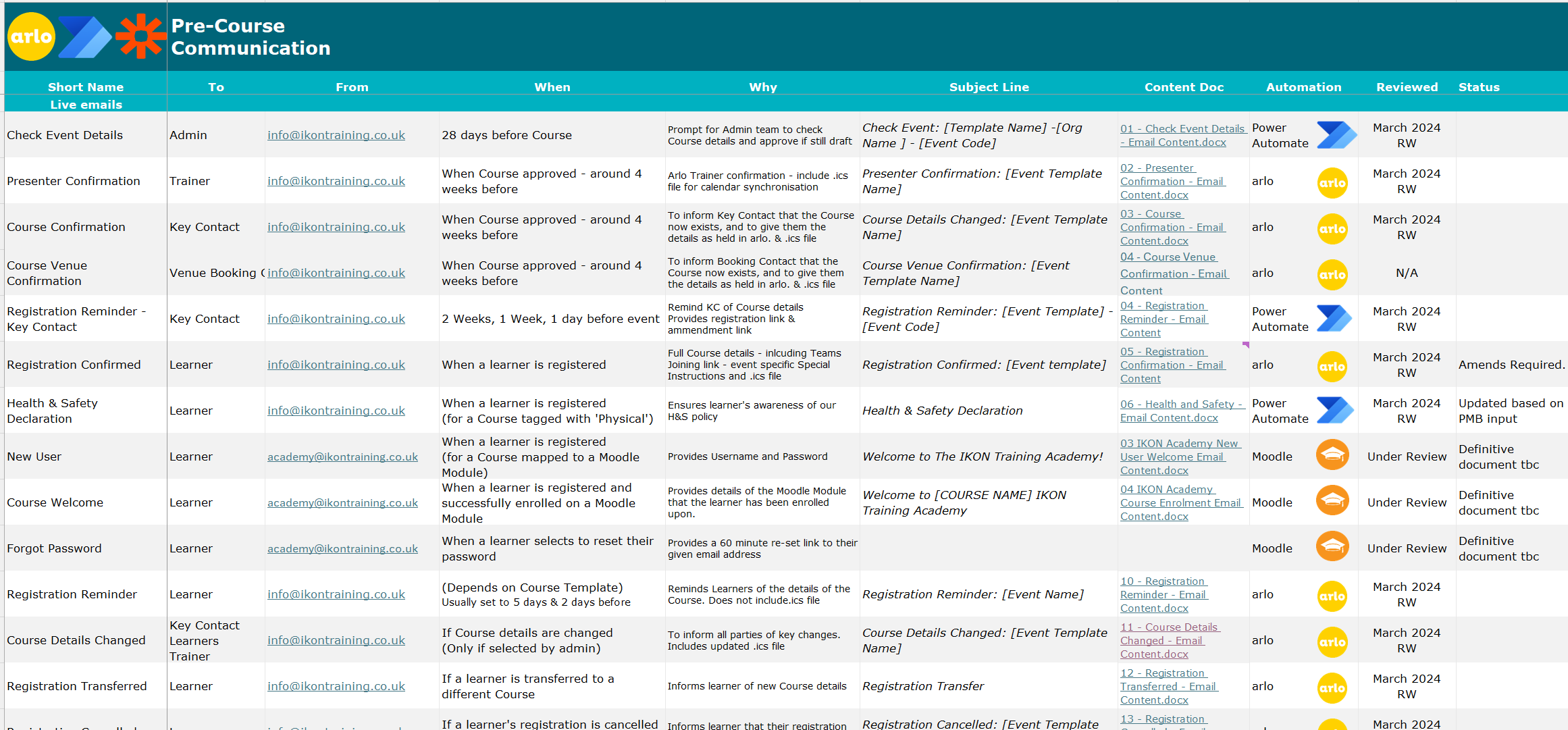

Mapping Course Communication

One challenge I overcame was creating a map that enabled those without technical abilities to easily monitor and update communication content. The complexity of the templates within Arlo, and the relatively low number of licences, meant that there were many within the organisation who could not easily access the communication templates in place for the growing number of courses, and could not easily comprehend the process of course communication: when emails were sent, their content, and to whom they were being sent.

Efficient course communication required additional automation of administrative tasks to reduce the manual workload on staff. By using tools such as Arlo and Power Automate, I created workflows that streamlined communication with learners, trainers, and key contacts, ensuring that pre-course processes such as registration and confirmation were handled smoothly and on time. However, this reduced visibility to those not involved in the operational process, such as those in marketing.

Automation allowed for timely delivery of emails and reminders, reducing the risk of missed communications and ensuring that all participants were well-prepared before the course began. It also freed up valuable time for administrative staff to focus on more critical tasks.

To ensure that all those interested could access the content of the course communication, I created the Course Communication Overview Map. This was split into Pre-Course, Post-Course, and Other, so that everyone could understand the flow of information. The origination source of the communication was listed, with a clear outline of the conditions. Crucially, each communication event had a content document that contained the content of the communication, so that this could easily be updated by anyone across the team.

Of course, the updating of this map and the associated content documents necessitated actual updates to the automation origination source: Arlo, Moodle, or the automation software—Zapier or Power Automate.

Stakeholder Collaboration

Engaging stakeholders throughout the deployment process was key to the successful adoption of learning technologies. By holding regular virtual meetings with trainers, administrative staff, and external partners, I was able to gather valuable feedback, address concerns, and ensure everyone was on board with the changes.

These collaborative sessions helped to foster a sense of ownership among the stakeholders and ensured that the deployment was aligned with the needs of all parties. By maintaining open communication channels, we were able to make timely adjustments and improve the overall implementation process.

This image is taken from a meeting with members of an organisation who had been part of a pilot of the new Affiliate Trainer process, involving members of their L&D team, their technical support team, and two Affiliate Trainers, as well as members of our Operations and Administration Team. In this meeting, we worked to overcome barriers to effective event administration, ensuring that all needs were met across both organisations. These meetings ensured consistency for learners, support and encouragement for trainers, and efficiency for administrators.

Change Management and Addressing Resistance

Resistance to change was a significant challenge during the deployment of new learning technologies. Many users were accustomed to the legacy systems and were hesitant to adopt new processes. To address this, I used a combination of formal training and informal support. I provided clear demonstrations of how the new systems would benefit them in their roles, such as reducing manual administrative tasks or improving access to learner data.

In addition, I worked closely with the trainers to ensure that the new technology was integrated into their daily routines in a way that was manageable. For example, the introduction of the Arlo-For-Mobile App required trainers to use their personal devices, which was initially met with resistance. I addressed this by ensuring that our IT policies covered the use of personal devices and that proper GDPR and security measures were in place. I also provided one-on-one support to trainers who were struggling with the app, which helped to build confidence and increase adoption rates.

Providing Training and Support

To ensure a smooth transition to the new learning technologies, I provided tailored training sessions for different user groups. These sessions included hands-on workshops, step-by-step guides, and video tutorials that were accessible on demand. I recognised that different users had varying levels of technical proficiency, so I made sure to adjust the content accordingly. Trainers, for instance, were provided with more in-depth training on how to use features related to learner data tracking and assessment, while administrative staff received training on managing enrolments and accessing reports.

I also hosted drop-in clinics, both virtually and in-person, to support users who needed additional help. This was particularly useful for those who were less confident with technology, as it provided them with the opportunity to ask questions and receive real-time support. These clinics also helped me identify common issues that users were facing, which informed the development of further training materials.

Supporting the deployment of this technology involved more than just technical work. I played a crucial role in strategising the rollout of our eLearning platform, working closely with the training team to ensure a smooth transition. This process included conducting training sessions for staff, preparing support materials, and establishing a feedback loop with learners to continually improve the platform. I also drafted content for our clients that was released in a series of posts on our website.

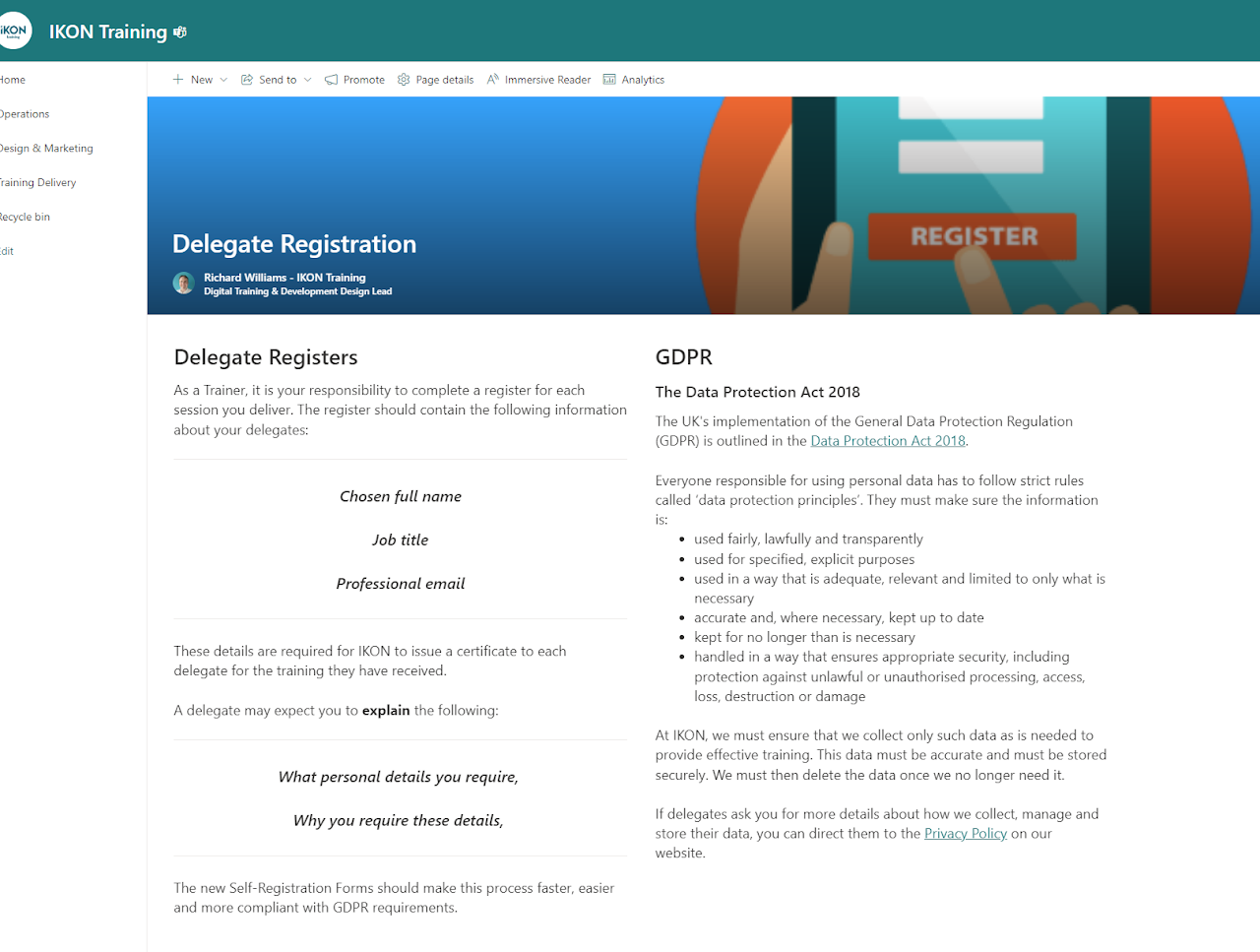

Digital Support Resources

Change management is most effective when users are provided with the resources they need to independently solve problems or adapt to new processes. In managing the deployment of the self-registration process, I recognised that not everyone prefers face-to-face support. Some users prefer to refer back to a help guide at their own pace, while others might prefer direct communication, such as a call or a quick Teams message.

To address this, I created digital support resources that were accessible to all trainers through SharePoint. This included step-by-step guides for the new self-registration process, with clear explanations on GDPR compliance. By providing multiple means of support—written guides, email, Teams, and phone calls—I ensured that users could access the help they needed in the format they were most comfortable with. This approach aligns with Kotter’s Change Management theory, which highlights the importance of empowering individuals to act and removing barriers to change.

By making these resources readily available, I helped ease the transition and encouraged trainers to upskill independently, ultimately reducing their reliance on direct, time-consuming support and allowing for smoother adoption of the new process.

Supporting the Deployment of Learning Templates

At the University of Suffolk, I played a pivotal role in facilitating online learning through the LMS, BrightSpace. Recognising the needs of our partner, East Coast College, I spearheaded the creation and deployment of a series of new Course Templates for their online modules and learning materials.

East Coast College Templates

The creation of these templates was crucial for East Coast College, which had struggled with coordinating similar projects, with academics uncertain about best practices and no dissemination of expertise. My modifications to existing university templates not only personalised the learning experience for students but also significantly saved time for academics.

Improving Efficiency and Personalisation

I used the auto-generated placeholder text feature of the BrightSpace LMS to personalise each module in various places, from greetings to course information pages and assessment instructions. This enhanced the narrative journey for learners through each module and set the tone of communication across the modules.
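The principle behind placeholder text is simple substitution: the template holds named tokens, and the LMS swaps in the learner's or module's details when the page is viewed. The sketch below illustrates that mechanism only. The curly-brace syntax and the token names are assumptions for illustration, not a record of the tokens used in the templates.

```python
import re


def personalise(template: str, values: dict[str, str]) -> str:
    """Replace {Token} placeholders with values, leaving unknown tokens as-is."""
    return re.sub(
        r"\{(\w+)\}",
        lambda match: str(values.get(match.group(1), match.group(0))),
        template,
    )


greeting = "Welcome to {ModuleName}, {FirstName}. Your tutor is {TutorName}."
print(personalise(greeting, {"ModuleName": "Research Skills", "FirstName": "Sam"}))
```

Leaving unknown tokens untouched, rather than blanking them, makes gaps easy to spot when a template is previewed.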

Upskilling and Training

To ensure effective use of these templates, I conducted over 10 hours of hybrid group-training sessions for the college staff, supplemented by asynchronous online support with digital learning guides and help resources, such as videos and presentations.

Project Outcomes

This initiative was highly successful, with the entire college efficiently using the templates throughout the year. The project not only streamlined the creation of online materials but is also anticipated to improve student satisfaction with the online learning experience.

Learner Engagement and Time Tracking

Tracking learner engagement on the platform is essential for improving the eLearning experience. By monitoring the time learners spend on pre-course and post-course activities, we can identify where they may struggle or succeed and make data-driven improvements to course design.

Using this data, I was able to identify that one learner spent an unusual amount of time on the platform (74 minutes), which prompted further investigation into the course structure and engagement levels. These insights allowed for targeted improvements in content and support resources, ensuring a better learning experience for future cohorts.
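A simple way to surface this kind of outlier is to compare each learner's time with the cohort median. The sketch below is illustrative only: the threshold factor is an assumption, and apart from the 74-minute figure the times and learner labels are made up for the example.

```python
from statistics import median


def flag_unusual_times(minutes_by_learner: dict[str, float],
                       factor: float = 3.0) -> list[str]:
    """Return learners whose time exceeds `factor` times the cohort median."""
    typical = median(minutes_by_learner.values())
    return sorted(
        learner for learner, minutes in minutes_by_learner.items()
        if minutes > factor * typical
    )


# Hypothetical cohort; only the 74-minute value comes from the real data.
cohort = {"learner_a": 10, "learner_b": 12, "learner_c": 9, "learner_d": 74}
print(flag_unusual_times(cohort))
```

The median is used rather than the mean because a single very long session would otherwise pull the average up and hide itself.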

Reflections

Reflecting on the deployment process, I learned that effective support requires a balance between technical knowledge and interpersonal skills. Engaging stakeholders early and providing ongoing support were key to a successful rollout. One of the biggest lessons was the importance of adaptability—being willing to adjust the deployment plan based on user feedback made the implementation smoother and increased overall satisfaction.

Another lesson learned was the value of creating multiple support channels. Not everyone learns best in a workshop setting, and providing a range of support options—such as video tutorials, written guides, and live support—helped ensure that all users could find the help they needed in a format that worked for them.

Reflecting on this journey, I recognise that the successful implementation of learning technology is not just about choosing the right tools but understanding how these tools fit within the broader educational context. Each tool needs to serve a purpose, whether linked to enrolment and registration, gathering of feedback/survey data, or the hosting of learning resources. Balancing the technical aspects with pedagogical considerations and stakeholder engagement has been a rewarding challenge. There is significant progress still to be made, particularly around increasing the capacity of our systems and better processing the vast quantity of data we are now producing.

Next Steps in Supporting Learning Technologies

Moving forward, I aim to enhance the support provided by incorporating more advanced analytics to track system usage and identify areas where users may need additional help. This data-driven approach will allow me to be more proactive in supporting users and ensure that the learning technologies are being utilised to their full potential. I also plan to develop more self-service resources, such as an expanded FAQ section and interactive tutorials, to further empower users to solve problems independently.

This process has not only enhanced my technical skills but also deepened my understanding of how technology can be harnessed to create impactful learning experiences. In the near future, I hope to apply data analysis to our databases to refine our operational processes and give learners better metrics on their performance. I expect this development to occur naturally as we build more learning courses within Moodle and have more learners progressing through their learning journeys. You can read more about this on the Next Page - Teaching and Learning.